Try Testkube instantly in our sandbox. No setup needed.

Executive Summary

AI coding assistants promised to speed up software delivery, and they delivered. According to one report, developers save almost 60% of their time on coding tasks, and other velocity metrics look just as impressive.

As the engineering lead, you're thrilled: your team shipped more features this quarter.

Then the security team flags three vulnerabilities, all of them in AI-generated code that passed your existing checks. Welcome to the AI velocity paradox.

While AI coding assistants speed up development, they also hurt stability: Google's DORA report associates increased AI adoption with an estimated 7.2% reduction in delivery stability. Testing infrastructure and CI/CD pipelines built for human developers can't keep up with the volume, velocity, and patterns of AI-generated code.

So the question is: how do you validate AI-generated code without becoming a bottleneck?

The New Speed of Development

We all know that the adoption of AI coding assistants happened fast. They didn’t just add a feature to your development workflow; they fundamentally changed the pace at which software gets written.

AI is everywhere in your pipeline: 76% of developers rely on AI for everything from code generation, documentation, and bug fixes to test creation. Developers accept close to 30% of AI suggestions and retain 88% of the generated code in their pull requests. This isn't a pilot project anymore; it's the way your team works.

AI coding assistants have cut the time for coding and boilerplate generation from hours to minutes, which has led to a massive increase in pull request volume.

But while everything else sped up, review capacity stayed flat, even as the amount of code requiring review doubled. Your existing test infrastructure and processes struggled to keep pace with AI-generated code.

The bottleneck wasn’t “how fast can we code?” anymore; it was “how fast can we validate that this code is safe to ship to production?”

The Quality Problem Nobody Talks About

While AI accelerates coding tasks, it introduces failure modes that differ from those of human developers. To solve the validation problem, you first need to understand where AI-generated code tends to fall short.

Security Vulnerabilities at Scale

One study analyzed more than 100 large language models (LLMs) and found that 45% of AI-generated code samples contained OWASP Top 10 security vulnerabilities. Many models also produced code with design flaws. There have also been reports of the tools themselves introducing risk, such as leaking secrets into repositories.

Why AI Code Fails Differently

AI coding assistants often generate code that is syntactically correct but contextually wrong. They don't fully understand your application's architecture, business rules, or security model. For example:

- SQL injection vulnerabilities may arise because the model has learned from insecure examples during training.

- Authentication checks might be omitted if the prompt doesn’t explicitly request them.

- Validation logic can be missing because the model tends to optimize for brevity and functional output, not robustness or security.
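To make the first and last failure modes concrete, here is a minimal Python sketch contrasting a string-built query of the kind an assistant might produce with a parameterized version that also validates its input. It uses only the standard-library `sqlite3` module; the `users` table, the function names, and the 64-character limit are hypothetical.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Vulnerable: user input is concatenated into the SQL string, so input
    # like "' OR '1'='1" changes the query's logic.
    query = "SELECT id, username FROM users WHERE username = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # Validate input first (hypothetical rule: non-empty, at most 64 chars).
    if not username or len(username) > 64:
        raise ValueError("invalid username")
    # Safe: the "?" placeholder makes the driver bind the value as data,
    # so it is never interpreted as SQL.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
    payload = "' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
    print(len(find_user_safe(conn, payload)))    # 0: no user has that name
```

Both functions "work" on the happy path, which is exactly why this kind of flaw passes a quick review; only an adversarial input exposes the difference.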

Edge cases are where AI coding assistants fail hardest. Experienced developers consider failure scenarios: What if the database is down? What if the input is malformed? What if the network fails? AI predicts what statistically comes next in the code, not what can go wrong in production.
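The defensive thinking described above can be sketched as a small retry wrapper around a call to a flaky dependency. This is a minimal illustration, not a prescribed pattern: `call_with_retry` is a hypothetical helper, and treating `ConnectionError` and `TimeoutError` as the transient failures worth retrying is an assumption; real code should match the exceptions its database or HTTP client actually raises.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying assumed-transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except (ConnectionError, TimeoutError):
            # Out of retries: surface the failure instead of hiding it.
            if attempt == attempts - 1:
                raise
            # Back off 0.5s, 1s, 2s, ... (with the default base_delay).
            sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")
```

Non-transient errors, such as a `ValueError` raised for bad input, propagate immediately rather than being retried, and the injectable `sleep` parameter lets tests exercise the failure path without real delays.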

The Trust Gap

AI now writes roughly 25% of production code, and most organizations have found vulnerabilities in AI-generated code. Yet code review hasn't adapted to this new way of working. Teams face far more code to examine with the same capacity, so reviews become superficial and critical flaws slip through.

Continuous Validation: The Answer

When AI generates code, validation has to match its speed, and more code reviews can't achieve that. It requires an automated, continuous validation approach.

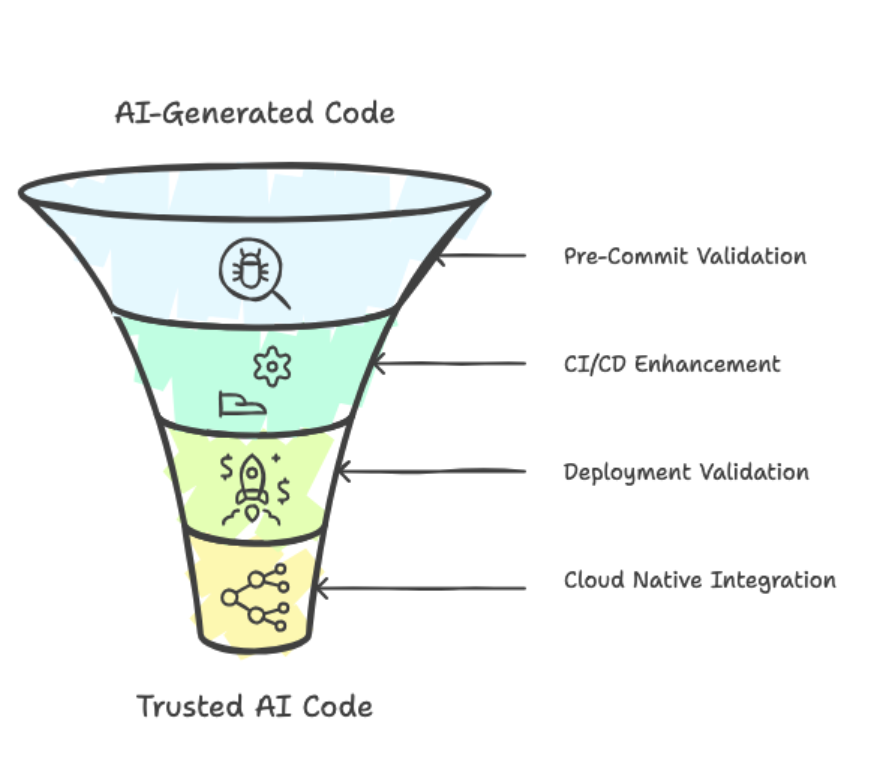

The Architecture

Validation must happen at every stage, from pre-commit and pre-merge through deployment and production, not only as a final gate before release. Traditional CI/CD pipelines test code in batches; continuous validation tests constantly, with AI-aware quality gates designed for AI-generated code.

Pre-commit Validation

This layer intercepts issues before code even enters version control. Static analysis tools look for AI code patterns known to cause problems. Static application security testing (SAST) scans for vulnerabilities common in AI-generated code, such as missing input validation or weak authentication. Automated dependency checks can catch hallucinated packages: non-existent libraries that AI sometimes invents.
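A pre-commit check for hallucinated packages could start as simply as the sketch below, which compares the names in a `requirements.txt`-style file against a trusted list. The trusted set is an assumption (for example, names exported from an internal package mirror or an allowlist you maintain), the helper names are hypothetical, and the sketch does not query any package registry.

```python
import re


def parse_requirements(text: str) -> list[str]:
    """Extract normalized package names from requirements.txt-style text."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and pip options like -r
            continue
        # The name ends at a version specifier, extras bracket, marker, or URL.
        name = re.split(r"[\s\[<>=!~;@]", line, maxsplit=1)[0]
        # PEP 503-style normalization: lowercase, runs of -_. become "-".
        names.append(re.sub(r"[-_.]+", "-", name).lower())
    return names


def find_unknown_packages(requirements: str, known: set[str]) -> list[str]:
    """Return declared packages missing from the (normalized) trusted set."""
    return [n for n in parse_requirements(requirements) if n not in known]
```

A hook would fail the commit whenever `find_unknown_packages` returns a non-empty list. Checking against a curated list, rather than mere existence on a public index, matters because an attacker can register a package under a name that models commonly hallucinate.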

CI/CD Enhancement

The CI/CD pipeline must scale to match the volume of AI-generated code, with unit tests for functions, integration tests for services, and end-to-end tests for workflows. Contract tests verify that API changes don't break existing consumers in production. Performance benchmarks can catch the resource-intensive code that AI sometimes generates. Finally, a quality gate blocks the merge if any check fails, including those from the pre-commit stage, or if test coverage drops.
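The merge-blocking quality gate can be reduced to a small decision function. In this sketch, the `CheckResult` type, coverage expressed as percentages, and the 0.5-point tolerance are all hypothetical; a real pipeline would feed in results from its own test and scanning jobs.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool


def quality_gate(
    checks: list[CheckResult],
    baseline_coverage: float,
    current_coverage: float,
    tolerance: float = 0.5,
) -> tuple[bool, list[str]]:
    """Decide whether a merge may proceed; return (allowed, reasons)."""
    reasons = [f"check failed: {c.name}" for c in checks if not c.passed]
    # Block if coverage fell by more than `tolerance` percentage points.
    if current_coverage < baseline_coverage - tolerance:
        reasons.append(
            f"coverage dropped from {baseline_coverage:.1f}% to {current_coverage:.1f}%"
        )
    return (not reasons, reasons)
```

In CI, a wrapper script would exit non-zero when the gate returns `False` (for example, `sys.exit(0 if allowed else 1)`), which fails the job; branch protection rules can then block the merge. The small tolerance keeps trivial coverage noise from blocking every pull request.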

Deployment Validation

The deployment validation layer protects production. Instead of exposing new changes to all traffic, canary deployments with automated health checks test changes on a small set of users first. Progressive rollout practices monitor real-time metrics and can trigger automated rollbacks on failure signals, before your customers notice. In Kubernetes environments, admission controllers can enforce security policies at the cluster level.
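The automated health check behind a canary rollout boils down to comparing the canary's error rate with the stable version's. This sketch assumes request and error counts are already available; the 1.5x threshold, the 100-request minimum, and the 0.1% baseline floor are illustrative assumptions, not recommended values. Progressive delivery controllers such as Argo Rollouts or Flagger run this kind of analysis against live metrics.

```python
def canary_decision(
    baseline_errors: int,
    baseline_requests: int,
    canary_errors: int,
    canary_requests: int,
    max_ratio: float = 1.5,
    min_requests: int = 100,
) -> str:
    """Return 'promote', 'rollback', or 'wait' for one canary rollout step."""
    # Too little canary traffic to judge reliably: keep observing.
    if canary_requests < min_requests:
        return "wait"
    baseline_rate = baseline_errors / baseline_requests if baseline_requests else 0.0
    canary_rate = canary_errors / canary_requests
    # Floor the baseline (assumed 0.1%) so a zero-error baseline doesn't turn
    # a single canary error into an automatic rollback.
    allowed = max(baseline_rate, 0.001) * max_ratio
    return "rollback" if canary_rate > allowed else "promote"
```

Returning `"wait"` instead of forcing a verdict on thin traffic is the key design choice: it avoids both promoting untested code and rolling back on statistical noise.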

Cloud Native Integration

Your continuous validation tools and workflows must integrate with your existing stack: GitHub Actions, GitLab CI, ArgoCD, and so on. Service meshes such as Istio and Linkerd provide runtime checks and traffic management, while observability platforms like Prometheus and Grafana give fast feedback on how code behaves in production.
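Feeding production metrics back into validation often means querying Prometheus directly. This sketch calls the Prometheus HTTP API's instant-query endpoint (`/api/v1/query`) using only the Python standard library. It assumes the query returns an instant vector; the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def build_query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    params = urllib.parse.urlencode({"query": promql})
    return f"{base_url.rstrip('/')}/api/v1/query?{params}"


def parse_first_value(payload: dict) -> Optional[float]:
    """Return the first sample of an instant-vector result, or None if empty."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    result = payload["data"]["result"]
    if not result:
        return None  # the query matched no series
    # Each vector sample's "value" is [unix_timestamp, "value as a string"].
    return float(result[0]["value"][1])


def query_prometheus(base_url: str, promql: str, timeout: float = 5.0) -> Optional[float]:
    """Run an instant query and return its first value (makes a network call)."""
    url = build_query_url(base_url, promql)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_first_value(json.load(resp))
```

A validation step might call `query_prometheus("http://prometheus:9090", 'sum(rate(http_requests_total{code=~"5..", track="canary"}[5m]))')`, where the server address and the metric and label names are hypothetical, and feed the result into a rollback decision.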

Building the Trust Framework

Move from “trust by default” to “zero trust”: every line of AI-generated code must prove itself safe before it moves to the next stage of the development lifecycle. Enable fast feedback loops so developers can fix issues while the context is fresh. Track DORA metrics such as deployment frequency, change failure rate, and mean time to recovery (MTTR) to confirm whether your changes are working.
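Tracking these DORA metrics can start with a simple calculation over deployment records. This sketch is illustrative: the `Deployment` record is hypothetical, and it measures recovery time from the deployment timestamp, a simplification of DORA's time to restore, which measures from when the user-impacting failure starts.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    deployed_at: datetime
    failed: bool  # did this deployment cause a failure in production?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: list[Deployment], period_days: int) -> dict:
    """Compute deployment frequency, change failure rate, and MTTR."""
    if not deploys:
        return {"deploys_per_day": 0.0, "change_failure_rate": 0.0, "mttr": None}
    failures = [d for d in deploys if d.failed]
    # Only failures that have been resolved contribute to MTTR.
    recovered = [d for d in failures if d.restored_at is not None]
    mttr = None
    if recovered:
        total = sum((d.restored_at - d.deployed_at for d in recovered), timedelta())
        mttr = total / len(recovered)
    return {
        "deploys_per_day": len(deploys) / period_days,
        "change_failure_rate": len(failures) / len(deploys),
        "mttr": mttr,
    }
```

Comparing these numbers before and after introducing AI-aware quality gates is one way to check that the gates improve stability without throttling deployment frequency.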

The Culture Layer

Technical changes help, but you need cultural change to reap the benefits. Developers need to adapt how they work with AI coding assistants: prompting AI to generate testable, modular code and asking it to write unit tests for its own output. Teams should also deploy agents at critical points to measure testability and code quality, enforce coverage standards, and block merge requests when any of these checks fail.

Conclusion: Speed Without Safety Is Unsustainable

Over 20 million developers use AI coding assistants, and adoption is only accelerating. AI development velocity isn't a trend anymore; it's the baseline for competitive software development.

But as the saying goes, with great power comes great responsibility: velocity without validation is a debt you'll pay in production incidents and security breaches. Traditional testing infrastructure, designed for human coding and reviews, can't keep up with the pace of AI-generated code.

Continuous validation bridges this gap by matching AI's code generation speed with automated, AI-aware checks at every stage.

The next step is clear: build a continuous validation framework that runs as fast as AI writes code. Implement pre-commit checks and quality gates that block vulnerable code. Use progressive delivery to validate changes with a small set of users, and build a culture that matches the speed of AI.

Testkube provides continuous testing infrastructure for cloud-native applications. Run automated tests at any stage with Test Workflows, validating code quality, security, and performance before deployment. Build a validation framework that matches AI development speed. Get started with Testkube.

About Testkube

Testkube is a cloud-native continuous testing platform for Kubernetes. It runs tests directly in your clusters, works with any CI/CD system, and supports every testing tool your team uses. By removing CI/CD bottlenecks, Testkube helps teams ship faster with confidence.

Explore the sandbox to see Testkube in action.
