Try Testkube instantly in our sandbox. No setup needed.

Executive Summary

Modern applications have evolved far beyond monolithic architectures. Instead of a single codebase, they are collections of services owned by different teams and deployed independently.

While this brings flexibility and scalability, it also introduces complexity, and this is where traditional testing falls short.

Unit tests don't catch integration issues. Integration tests can't simulate the fully distributed environment. End-to-end tests become slow and flaky. And when a test fails, figuring out which service caused the issue becomes an investigation.

Microservices testing demands new strategies. These include contract tests to prevent breaking changes, chaos engineering to validate resilience, and ephemeral environments that mirror production.

In this blog post, we’ll share a comprehensive microservices testing strategy that covers everything from cloud-native testing in Kubernetes to AI-powered approaches, along with answers to common testing challenges in distributed systems.

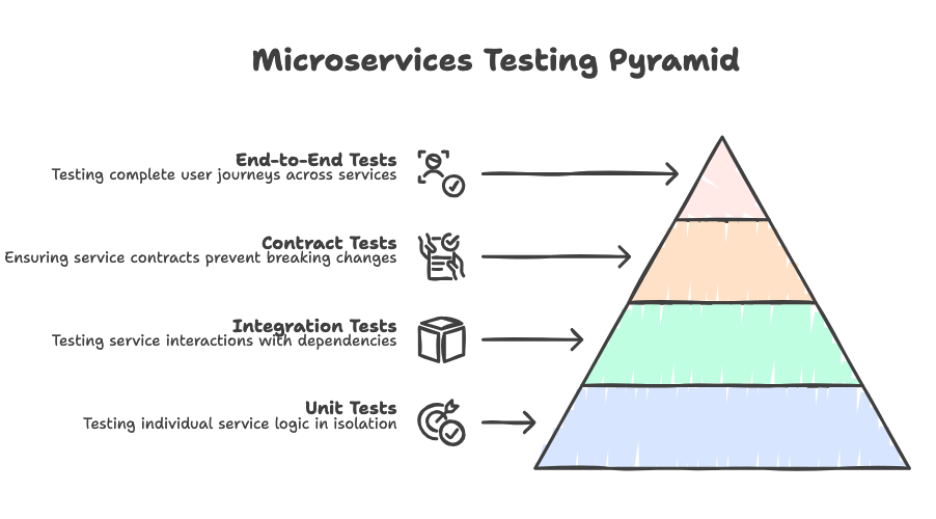

Microservices Testing Pyramid

A testing pyramid is a blueprint that guides how you should distribute your testing efforts. It suggests that you should have many fast, cheap unit tests at the base, a few integration tests in the middle, and only a handful of slow and expensive end-to-end tests at the top. This ensures faster feedback, manageable tests and better resource usage.

This pyramid holds true for microservices as well, but with a new layer. The distributed nature of microservices introduces contract tests that sit between the integration and end-to-end tests. These address the challenge of service-to-service communication without the need for all services to run simultaneously.

Let's look at each layer in a microservices context.

Unit Tests

These foundational tests focus on individual service logic without external dependencies. Each microservice should have a comprehensive unit test suite that validates its business logic, validation rules, and other internal functions. Teams can use stubs and mocks to simulate databases and APIs, keeping the tests fast and isolated. The goal is fast feedback and high coverage: these tests are cheap to write and maintain, and they catch logic errors early in the development cycle.
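As a minimal sketch of this idea, the example below unit-tests a hypothetical `OrderService` whose database access is replaced by a mock from Python's standard `unittest.mock` module. The class, its `repo` dependency, and the discount rule are illustrative assumptions, not taken from any real service.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical service: business logic only, storage is injected."""

    def __init__(self, repo):
        self.repo = repo

    def total_with_discount(self, order_id):
        order = self.repo.get_order(order_id)  # would hit a database in production
        total = sum(item["price"] * item["qty"] for item in order["items"])
        # Illustrative business rule: 10% off orders of 100 or more.
        return round(total * 0.9, 2) if total >= 100 else total


def test_discount_applied_for_large_orders():
    repo = Mock()
    repo.get_order.return_value = {"items": [{"price": 60, "qty": 2}]}
    service = OrderService(repo)
    assert service.total_with_discount("o-1") == 108.0
    # The mock also lets us verify how the dependency was used.
    repo.get_order.assert_called_once_with("o-1")


def test_no_discount_below_threshold():
    repo = Mock()
    repo.get_order.return_value = {"items": [{"price": 20, "qty": 2}]}
    assert OrderService(repo).total_with_discount("o-2") == 40
```

Because the repository is injected, neither test needs a database, so both run in milliseconds and can execute on every commit.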

Integration Tests

Integration tests validate how a service interacts with its direct dependencies, such as databases and external APIs. Unlike unit tests, they use real dependencies rather than mocks to test how your service handles database transactions, message serialization, and connection failures. The goal of an integration test is to ensure that your service works well with its infrastructure dependencies. You're not testing service-to-service communication yet.

Contract Tests

Contract tests play a crucial role in microservices by ensuring that the expectations between service consumers and providers are clearly defined and consistently met. For instance, when service A calls service B, a contract test ensures that service B’s response matches what service A expects. This prevents the common scenario where a provider team unknowingly breaks downstream services.
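The core check behind a contract test can be sketched in a few lines. In this simplified, hypothetical example, service A (the consumer) declares the fields it reads from service B's user response, and a helper reports any mismatch. The contract format and the `contract_violations` helper are assumptions made for illustration; dedicated contract testing tools add publishing, versioning, and provider verification on top of this idea.

```python
# Contract published by the consumer (service A): the fields it reads and their types.
USER_CONTRACT = {"id": int, "email": str, "active": bool}


def contract_violations(response: dict, contract: dict) -> list[str]:
    """Return human-readable violations; an empty list means the contract holds."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(response[field]).__name__}"
            )
    return problems


# Extra fields are fine: the consumer simply ignores them.
compatible = {"id": 7, "email": "a@example.test", "active": True, "nickname": "al"}
# A renamed field and a changed type both break the consumer.
broken = {"id": 7, "mail": "a@example.test", "active": "yes"}
```

Running `contract_violations(broken, USER_CONTRACT)` in the provider's CI pipeline would flag the renamed `email` field and the changed `active` type before service B ships.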

End-to-end Tests

These tests validate complete user journeys across multiple services, simulating real user behavior from frontend to backend. They are valuable but expensive: they require spinning up multiple services, are slow to execute, and are prone to flakiness. Reserve them for critical business paths like user registration, order checkout, or other core workflows.

Testing Strategies for Microservices

While the testing pyramid gives guidance on how to plan your tests, there are specific strategies that address the distributed system challenges. These approaches focus on how to test effectively in cloud native environments, handle service dependencies, validate resilience, and ensure performance at scale.

Service Virtualization and Test Doubles

Deciding when to use a real service versus mocks/stubs is crucial to balance speed and accuracy of testing.

- Use mocks and stubs for 3rd-party APIs, or services with rate limits and costs

- Leverage service virtualization to create lightweight replicas of services that are unstable or unavailable.

- Use real dependencies for critical integrations that you can control and manage.

- Ensure test doubles accurately reflect real service behavior, including error responses and edge cases
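To illustrate the last point, here is a sketch of a test double that reproduces error responses and edge cases, not just the happy path. `FakeGeocodingClient`, `RateLimitError`, and `resolve_cities` are hypothetical names invented for this example; the fake stands in for a rate-limited third-party API.

```python
class RateLimitError(Exception):
    """Raised by the fake when the simulated quota is exhausted."""


class FakeGeocodingClient:
    """Hypothetical stand-in for a rate-limited third-party API."""

    def __init__(self, quota: int, known: dict):
        self.quota = quota
        self.known = known
        self.calls = 0

    def lookup(self, city: str):
        self.calls += 1
        if self.calls > self.quota:
            # Mirrors the error path of the real service (assumed to be HTTP 429).
            raise RateLimitError("429 Too Many Requests")
        # Edge case: unknown cities yield no match rather than an error.
        return self.known.get(city)


def resolve_cities(client, cities):
    """Code under test: degrade gracefully instead of failing the whole batch."""
    results = {}
    for city in cities:
        try:
            results[city] = client.lookup(city)
        except RateLimitError:
            results[city] = "deferred"
    return results


fake = FakeGeocodingClient(quota=2, known={"Oslo": (59.91, 10.75)})
resolved = resolve_cities(fake, ["Oslo", "Atlantis", "Lima"])
```

Here the third lookup exceeds the simulated quota, so the test exercises the rate-limit branch without spending real API calls.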

Testing in Kubernetes

Microservices that are ultimately deployed to Kubernetes need to be tested there too. Production-like testing in Kubernetes helps catch issues that local environments miss, from DNS and network policy errors to resource constraints.

- Ephemeral test environments spin up isolated service copies for each test run or pull request.

- Operators and custom resources orchestrate complex scenarios, managing dependencies and cleanup.

- Validate deployment manifests, health checks, readiness probes, and resource configurations.

- Test pod-to-pod communication, service discovery, and network policies in realistic conditions.
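Manifest validation is the easiest of these checks to automate before anything reaches a cluster. The sketch below assumes a Deployment manifest that has already been parsed from YAML into a Python dict. The `manifest_problems` helper and the sample `orders` deployment are hypothetical, and which checks to enforce is a team policy decision.

```python
def manifest_problems(deployment: dict) -> list[str]:
    """Return probe and resource-limit gaps for each container in a Deployment."""
    problems = []
    containers = deployment["spec"]["template"]["spec"]["containers"]
    for container in containers:
        name = container.get("name", "<unnamed>")
        for probe in ("readinessProbe", "livenessProbe"):
            if probe not in container:
                problems.append(f"{name}: missing {probe}")
        limits = container.get("resources", {}).get("limits", {})
        for resource in ("cpu", "memory"):
            if resource not in limits:
                problems.append(f"{name}: missing {resource} limit")
    return problems


# Hypothetical manifest, as it would look after parsing the YAML into a dict.
deployment = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [
        {
            "name": "orders",
            "readinessProbe": {"httpGet": {"path": "/ready", "port": 8080}},
            "resources": {"limits": {"memory": "256Mi"}},
        }
    ]}}},
}
```

A CI step could fail the build whenever `manifest_problems(deployment)` returns a non-empty list.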

Contract Testing

Consumer-driven contract testing catches breaking changes before deployment by validating service agreements independently without requiring all services to be up and running.

- Consumers publish contracts defining API expectations - request format, required fields, response structure

- Providers run contracts as tests in CI pipelines, failing builds if they violate expectations

- Schema validation catches field renames, type changes, or missing required fields

- API versioning becomes testable - validate backward compatibility across versions
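The schema validation and versioning points can be sketched as a provider-side diff between two versions of a response schema. The schema format used here (a mapping of field name to type name) and the `breaking_changes` helper are simplifying assumptions; real schemas such as OpenAPI documents are richer, but the comparison follows the same logic.

```python
def breaking_changes(old: dict, new: dict) -> list[str]:
    """Compare two response schemas, each mapping field name -> type name."""
    changes = []
    for field, old_type in old.items():
        if field not in new:
            changes.append(f"removed or renamed: {field}")
        elif new[field] != old_type:
            changes.append(f"type changed: {field} {old_type} -> {new[field]}")
    # Fields that exist only in `new` are additive and therefore not reported.
    return changes


schema_v1 = {"id": "integer", "email": "string", "created": "string"}
schema_v2 = {
    "id": "string",             # type change: breaks consumers expecting an integer
    "email_address": "string",  # rename: consumers reading `email` get nothing
    "created": "string",
    "locale": "string",         # new field: backward compatible
}
```

Only changes that affect existing consumers are reported, which is why the added `locale` field passes silently.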

Chaos & Resilience Testing

Chaos testing helps validate your system’s resilience by intentionally injecting realistic failure scenarios.

- Simulate network latency, timeouts, and pod crashes to test recovery mechanisms.

- Validate that retry and fallback logic works correctly when dependencies become unavailable, without overwhelming downstream services.

- Test that circuit breakers open under load and close when services recover.

- Use tools like Chaos Mesh in Kubernetes to inject failures programmatically.
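Cluster-level tools inject failures into real infrastructure, but the same principle can be exercised in-process. The sketch below injects failures into a fake dependency and checks that a simple circuit breaker opens and later closes. `CircuitBreaker`, `FlakyDependency`, and the injectable `clock` are hypothetical constructs written for this example, not the API of any particular library.

```python
class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without touching the dependency."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int, reset_after: float, clock):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock  # injectable so tests control time
        self.failures = 0
        self.opened_at = None

    def call(self, fn):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("failing fast")
            # Reset window elapsed: let one trial call through (half-open).
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


class FlakyDependency:
    """Fault injection point: fails until `healthy` is set to True."""

    def __init__(self):
        self.healthy = False
        self.calls = 0

    def __call__(self):
        self.calls += 1
        if not self.healthy:
            raise ConnectionError("injected failure")
        return "ok"
```

Passing the clock in as a parameter keeps the test deterministic: it can jump past the reset window instead of sleeping.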

Performance & Load Testing

Test the performance of each individual service to prevent bottlenecks from compounding across the distributed system and reaching production.

- Establish performance baselines for response times, throughput, and resource usage

- Use distributed tracing to pinpoint slow services or database queries during degradation

- Load test with realistic data volumes, network latency, and resource constraints

- Test in production-like infrastructure that mirrors real deployment conditions
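A baseline only helps if something compares against it. As a rough sketch, the helper below computes a 95th percentile latency with Python's standard `statistics` module and flags metrics that exceed their baseline by more than a tolerance. The metric names, the 10% tolerance, and the assumption that lower values are better for every metric are illustrative choices.

```python
import statistics


def p95(samples_ms):
    """95th percentile: the last of the 19 cut points that split data into 20 groups."""
    return statistics.quantiles(samples_ms, n=20)[-1]


def regressions(baseline: dict, current: dict, tolerance: float = 0.10) -> list[str]:
    """Return metrics whose current value exceeds baseline by more than `tolerance`.

    Assumes lower is better for every metric. A metric missing from `current`
    is treated as a regression so that gaps in measurement are not ignored.
    """
    return [
        metric
        for metric, base in baseline.items()
        if current.get(metric, float("inf")) > base * (1 + tolerance)
    ]


baseline = {"p95_ms": 200.0, "cpu_millicores": 250.0}
current = {"p95_ms": 230.0, "cpu_millicores": 255.0}
```

With these sample numbers, the p95 latency is 15% above baseline and gets flagged, while the 2% CPU increase stays within tolerance.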

AI-Powered Testing for Microservices

AI is rapidly changing the way we build, test, and deploy applications. From a microservices testing perspective, AI helps with generating tests and analyzing failure patterns across distributed systems. It doesn’t replace existing testing tools or strategies but complements them by reducing manual effort and uncovering issues that might slip through conventional approaches. Here’s how you can use AI in cloud-native testing workflows.

Test Generation & Maintenance

Writing and maintaining tests can be time-consuming, especially as services evolve. AI tools can analyze your codebase and understand your API specification to automatically generate test cases, significantly reducing the time spent on writing boilerplate tests.

- Generate unit and integration tests from code analysis and existing patterns

- Auto-generate contract tests from OpenAPI specifications

- Suggest additional test cases and edge cases

- Automatically update tests when APIs or contracts change, reducing maintenance overhead

AI-driven Test Analysis and Debugging

Debugging test failures in a distributed system is complex because the relevant logs and metrics are spread across multiple services and infrastructure components. When tests fail across services, AI can help connect the dots: correlating data, finding patterns, and identifying root causes faster than manual analysis.

- Root cause analysis that traces failures across service boundaries and dependencies

- Query test results and logs in natural language

- Correlate test failures with deployment changes, infrastructure events, and system metrics

- AI agents surface relevant context from logs, traces, and metrics automatically

AI-Powered Scenario Generation

One of the most powerful uses of AI in testing is its ability to learn from real-world usage. It can analyze production traffic patterns and user behavior to generate realistic test scenarios that manual test design might miss.

- Generate test scenarios from real-world production traffic patterns and API usage

- Identify missing test coverage by analyzing code paths and production data

- Edge case discovery from anomalies and unusual patterns in production

- Continuously evolve test suites based on how services are being used

Practical Implementation with AI Tools

The best part is that you don’t need to overhaul your existing system completely to use AI for testing. You can start small by integrating AI into your existing CI/CD pipelines or IDEs as assistants.

- MCP servers (like Testkube's MCP) integrate AI capabilities into testing workflows and CI/CD pipelines

- GitHub Copilot and similar AI coding assistants help write tests faster during development

- AI agents can continuously improve test suites, suggest refactoring, and identify gaps

- LLMs generate test documentation, explain test failures, and facilitate knowledge sharing

Best Practices for Microservices Testing

Microservices testing isn’t just about writing tests; it’s about building a sustainable testing culture that scales with your architecture. Here are some best practices that have emerged from our discussions with various teams running distributed systems in production.

Shift Testing Left (and Right!)

Testing shouldn't happen only in dedicated QA environments. Teams should push testing earlier in development and extend it into production for complete coverage.

- Run tests early in CI/CD pipelines, ideally on every commit or pull request

- Pre-merge validation catches issues before they reach the main branch

- Automated testing in development environments creates faster feedback loops for developers

Test Independence and Parallelization

Writing independent tests that can run in any order and in parallel dramatically reduces feedback time and improves the overall reliability of test executions.

- Each test should be independent - no shared state or execution order dependencies

- Parallel test execution, especially in Kubernetes, maximizes resource usage and speeds up CI pipelines

- Use namespace isolation to run multiple test suites simultaneously without conflicts
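One way to picture namespace isolation is a runner that gives every suite its own uniquely named namespace and executes suites in parallel. In this sketch, `namespace_for`, `run_suite`, and `run_all` are hypothetical helpers, and the body of `run_suite` is a placeholder: a real runner would create the namespace, deploy the services, run the tests, and delete the namespace afterwards.

```python
import re
import uuid
from concurrent.futures import ThreadPoolExecutor


def namespace_for(suite: str) -> str:
    """Build a unique name that is a valid DNS label (lowercase, digits, hyphens, max 63 chars)."""
    slug = re.sub(r"[^a-z0-9]+", "-", suite.lower()).strip("-")
    suffix = uuid.uuid4().hex[:8]  # random part keeps concurrent runs from colliding
    return f"test-{slug[:40]}-{suffix}"


def run_suite(suite: str) -> dict:
    namespace = namespace_for(suite)
    # Placeholder for: create namespace, deploy, run tests, delete namespace.
    return {"suite": suite, "namespace": namespace, "passed": True}


def run_all(suites):
    """Run suites concurrently; each one works only inside its own namespace."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(run_suite, suites))
```

Because no suite shares a namespace with another, two runs of the same suite (for example, from two pull requests) cannot interfere with each other.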

Test Data Management

Managing test data across distributed services and databases is one of the trickiest aspects of microservices testing and requires a careful strategy.

- Each service should manage its own test data - avoid coupling through shared test databases

- Implement proper data cleanup between test runs to prevent state pollution

- Leverage synthetic data for privacy and compliance in test environments
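Cleanup and synthetic data can be combined in a single fixture. The sketch below uses an in-memory SQLite database from Python's standard library as a stand-in for a service's own test database. The `users` table and the `seeded_users` helper are assumptions made for illustration.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def seeded_users(conn, count: int):
    """Insert synthetic users for one test and always remove them afterwards."""
    # Synthetic data: no real names or addresses; `.test` is a reserved TLD.
    rows = [(f"user-{i}", f"user{i}@example.test") for i in range(count)]
    conn.executemany("INSERT INTO users (id, email) VALUES (?, ?)", rows)
    conn.commit()
    try:
        yield [row[0] for row in rows]
    finally:
        # Runs even if the test body raises, so no state leaks into the next test.
        conn.executemany("DELETE FROM users WHERE id = ?", [(row[0],) for row in rows])
        conn.commit()


def user_count(conn) -> int:
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id TEXT PRIMARY KEY, email TEXT NOT NULL)")
```

A test would wrap its body in `with seeded_users(conn, 3) as ids:` and can rely on the table being empty again once the block exits.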

Observability for Testing

Observability is not just for your applications. Treating tests as observable systems helps you understand failures quickly and validate that monitoring works before production deployment.

- Leverage metrics, logs, and distributed traces during test execution for debugging

- Use observability tools to understand why tests fail, especially in distributed scenarios

- Correlate test results with system behavior - CPU spikes, memory usage, network latency

Version Compatibility Testing

Services deploy independently in microservices architectures, making version compatibility testing critical for preventing breaking changes.

- Test backward and forward compatibility between service versions explicitly

- Validate that old clients work with new service versions and vice versa

- Leverage canary deployments and progressive delivery strategies to test new versions with production traffic
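The first two checks amount to a compatibility matrix: every supported client version against every supported payload version. The sketch below assumes a hypothetical user API where v2 adds an optional `locale` field. Both parser functions and both sample payloads are invented for illustration.

```python
def parse_user_v1_client(payload: dict) -> dict:
    """Old client: reads only the fields it knows and ignores the rest."""
    return {"id": payload["id"], "name": payload["name"]}


def parse_user_v2_client(payload: dict) -> dict:
    """New client: tolerates payloads from old servers that lack `locale`."""
    return {
        "id": payload["id"],
        "name": payload["name"],
        "locale": payload.get("locale", "en"),  # assumed default for old servers
    }


PAYLOADS = {
    "v1": {"id": 1, "name": "Ada"},
    "v2": {"id": 1, "name": "Ada", "locale": "fr"},
}
CLIENTS = {"v1": parse_user_v1_client, "v2": parse_user_v2_client}


def compatibility_matrix() -> dict:
    """Try every client against every payload version and record the outcome."""
    matrix = {}
    for client_version, parse in CLIENTS.items():
        for payload_version, payload in PAYLOADS.items():
            try:
                parse(payload)
                matrix[(client_version, payload_version)] = True
            except KeyError:
                matrix[(client_version, payload_version)] = False
    return matrix
```

Any `False` entry in the matrix marks a client and server pair that cannot be deployed side by side.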

Conclusion

Testing microservices requires a multi-layered approach - from unit tests to contract tests to strategic end-to-end validation. The key is finding the right balance between coverage, speed, and maintenance cost while building the confidence to ship frequently and safely.

Start with the basics: establish the testing pyramid, implement contract tests to prevent breaking changes, and test in production-like environments. Explore chaos engineering to validate resilience and use performance testing to catch bottlenecks before they reach production.

And don’t ignore the potential of AI. Whether it’s generating tests, analyzing failures, or surfacing edge cases from production data, AI can be a powerful ally in your testing workflow - helping teams move faster without compromising quality.

Ultimately, the goal is to create a testing culture that scales with your system, supports rapid development, and gives your team the confidence to innovate.

About Testkube

Testkube is a cloud-native continuous testing platform for Kubernetes. It runs tests directly in your clusters, works with any CI/CD system, and supports every testing tool your team uses. By removing CI/CD bottlenecks, Testkube helps teams ship faster with confidence.

Explore the sandbox to see Testkube in action.