Try Testkube instantly in our sandbox. No setup needed.

Executive Summary

Your applications now run on Kubernetes, are deployed using GitOps, and are scaled automatically during increased load, but your tests still run the same way they did five years ago. If this sounds familiar, don’t worry, you’re not alone.

While organizations have adopted cloud native technologies to build and deploy their applications, teams still rely on traditional testing practices that can create unexpected inconsistencies between how applications are built and deployed in production.

This contradicts the reliability and speed benefits that drew you to cloud native in the first place. The solution isn't to add more tools onto your existing testing setup, but to evolve your testing practices alongside your infrastructure.

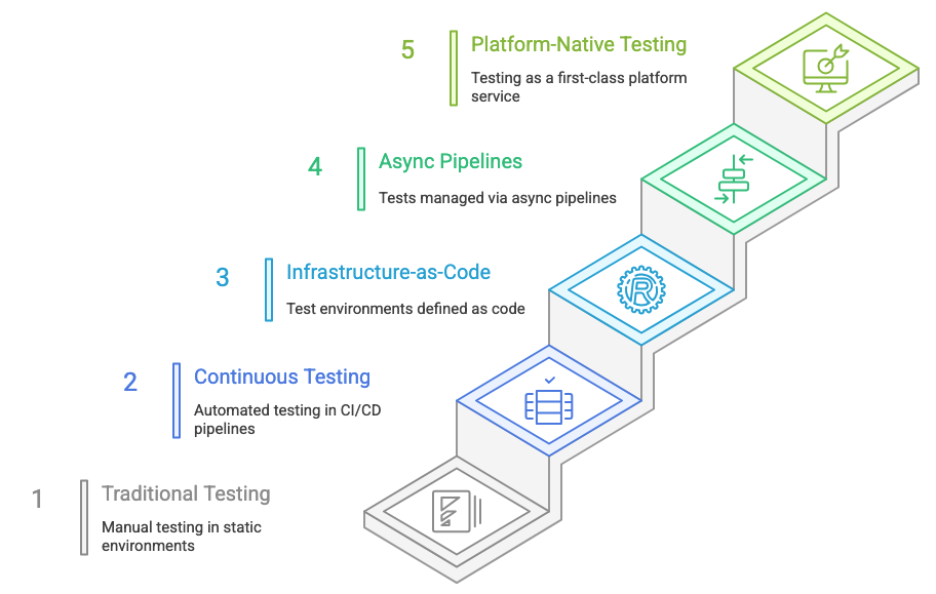

In this post, we look at a 5-stage cloud native testing maturity model that is designed to close that gap by evolving your testing practices alongside your cloud native infrastructure, ensuring consistency across the entire software lifecycle.

Cloud Native and Its Testing Implications

Before diving into cloud native testing, it’s important to understand what it means to be cloud native and how it fundamentally changes your testing strategy. Cloud native isn’t just about running applications in containers; it's about how they are architected, built, and deployed, which creates new testing challenges and opportunities.

What Cloud Native Really Means

- Applications are built as loosely coupled services and deployed in containers rather than monolithic applications deployed on virtual machines. This enables independent scaling, deployment, and failure isolation while allowing the teams to work autonomously on each service and choose different technologies for each.

- Applications are designed to be dynamic, scalable, and resilient. Their components scale independently and recover from failure automatically, so the system survives individual component failures without a total outage.

- Infrastructure is treated as cattle and not pets. It introduces components that are ephemeral and replaceable rather than long-lived and manually maintained, ensuring consistency and eliminating manual configuration drift.

- Cloud native applications support multiple deployment options, including containerized platforms like Kubernetes, serverless platforms like AWS Lambda, and edge computing environments.

How Cloud Native Changes Testing

- Test environments must mirror production and be dynamic and distributed to ensure environment consistency instead of being simplified static setups.

- Validating infrastructure behaviors - scaling, rollback, recovery - and other operational behaviors becomes as important as functional testing.

- Testing approaches must account for the different deployment models - tests that work for Kubernetes may not work on serverless platforms.

- Test environments and infrastructure must be defined as code using the same principles as production infrastructure.

- Beyond functional and integration testing, cloud native testing must also validate the synchronous and asynchronous event processing that is common in cloud native applications.

The Cloud Native Ecosystem and Testing

- Container orchestration platforms like Kubernetes have become the de facto tools for managing containerized applications at scale, requiring testing strategies to validate deployment across namespaces and nodes.

- Serverless platforms like AWS Lambda and Azure Functions demand a completely different form of testing, since traditional methods can’t easily replicate event-driven, stateless execution or vendor-specific runtime environments.

- Infrastructure-as-code tools like Terraform and CloudFormation enable you to create test environments that mirror production setups, but this also requires you to test the infrastructure code itself.

- GitOps tools like Argo CD and Flux enable the management of test configurations and environments using the same Git-based workflows that deploy production applications.

- Cloud native observability tools like Prometheus, Grafana, Jaeger, and OpenTelemetry provide rich telemetry data, enabling new forms of testing that validate not just functional behavior but also performance characteristics.

The Cloud Native Testing Maturity Model

Having understood the nuances of cloud native testing, it’s imperative to understand how to systematically evolve your current practices. Many organizations recognize the gap between their testing approaches and cloud native principles, but struggle with where to start and how to progress without disrupting existing workflows.

This cloud native testing maturity model has emerged from observing how successful organizations have evolved their testing practices over time. Rather than a big bang approach to implementing cloud native testing, the most effective teams follow a predictable progression - starting with basic automation, then adding infrastructure-as-code principles, eventually reaching full platform-native testing capabilities.

The model provides a roadmap that acknowledges where most teams are today while offering a clear path forward.

Stage 1: Traditional Testing, No Cloud Native

This is the initial stage where organizations rely on manual tests and static test environments that bear little or no resemblance to their cloud native production environment. Testing activities usually focus on functional aspects of the application, while non-functional aspects like scalability, resilience, and performance receive minimal attention or are tested separately using different tools and environments. Further, test environments are shared resources that accumulate configuration drift over time.

Teams typically maintain dedicated QA environments that are manually provisioned and configured, with testing processes that evolved alongside traditional monolithic applications and haven't adapted to cloud native operational patterns or distributed system characteristics.

In terms of tools, teams at this stage typically use basic CI/CD tools like Jenkins for functional test automation, manual test environment provisioning, testing frameworks focused on unit and API testing, separate performance testing tools like JMeter used sporadically, and shared test databases that create dependencies between teams and test runs.

Stage 2: Continuous Testing in CI/CD

At this stage, organizations have understood the importance of continuous testing and have implemented automated testing within CI/CD pipelines, adding shift-left practices that provide rapid feedback to developers on every code commit. Testing becomes continuous and integrated into the development workflow, but test environments still don't match cloud native production patterns. Most of the testing is still focused on validating functional use cases but has matured greatly from the previous stage.

Teams transition from manual testing by integrating test automation into their existing CI/CD infrastructure and establishing testing as an automated checkpoint that prevents broken code from progressing through the pipeline. The focus shifts from manual execution to automated execution, where failing tests immediately halt the deployment process and require fixes before code can advance. Organizations invest in test automation frameworks and start treating their tests as code, following version control and code review practices.

Organizations opt for modern CI/CD platforms like GitHub Actions, GitLab CI, or Azure DevOps, alongside comprehensive test automation suites, containerized test execution for consistency, automated security scanning tools like SAST/DAST integrated into pipelines, and basic performance testing with simplified load profiles. Test results are part of the pipeline execution reports and visible to the entire organization.

Stage 3: Infrastructure-as-Code Testing

This is where test environments are defined as code using the same cloud native patterns and tools that teams use to manage production infrastructure, ensuring consistency between testing and production environments. Teams adopt container-native test execution and platform-specific testing frameworks that match their deployment models. Non-functional testing becomes more sophisticated as improved and realistic test environments enable proper load testing, chaos engineering experiments, and security validation that reflects actual production conditions.

Organizations evolve from CI/CD automation by treating their test infrastructure with the same infrastructure-as-code principles they've adopted for production systems. Teams begin containerizing their test execution environments and use declarative configuration files to define test infrastructure that can be version-controlled, reviewed, and automatically provisioned. The shift involves moving from shared, persistent test environments to ephemeral, on-demand test environments that are created for specific test runs and destroyed afterward.

Teams leverage existing tools like Terraform and vCluster for test environment definitions and provisioning, Testcontainers for integration testing with real databases and services, Docker and Kubernetes for container-native test execution, and chaos engineering tools like Chaos Monkey for resilience testing. Test environments become ephemeral and can be provisioned automatically with a new PR.
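The ephemeral, per-PR lifecycle described above can be sketched in a few lines. This is a minimal illustration, not a real provisioner: `ACTIVE_ENVIRONMENTS`, `ephemeral_environment`, and `run_tests_for_pr` are hypothetical names, and the in-memory set stands in for whatever tool actually creates and destroys the environment.

```python
from contextlib import contextmanager

# Hypothetical in-memory registry standing in for a real provisioner
# (for example a Terraform run or a virtual cluster). No real tool is called.
ACTIVE_ENVIRONMENTS = set()


@contextmanager
def ephemeral_environment(pr_number):
    """Create a test environment for one PR and always tear it down."""
    name = f"test-pr-{pr_number}"
    ACTIVE_ENVIRONMENTS.add(name)          # provision (assumed step)
    try:
        yield name
    finally:
        ACTIVE_ENVIRONMENTS.discard(name)  # destroy, even if the tests raise


def run_tests_for_pr(pr_number, test_fn):
    """Run test_fn inside a fresh environment and return its result."""
    with ephemeral_environment(pr_number) as env:
        return test_fn(env)
```

The `try`/`finally` is the point of the sketch: teardown runs whether the tests pass, fail, or crash, so no environment outlives its test run and accumulates drift.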

Stage 4: Testing in Async Pipelines and Progressive Delivery

At this stage, tests, test configurations, and test environments are managed through asynchronous, declarative workflows where testing becomes deeply integrated with deployment processes. GitOps represents one popular approach where all test assets are version-controlled and managed via Git. Non-functional testing reaches maturity as teams can reliably test performance, security, and resiliency in production-like environments, with test policies and compliance requirements enforced through code rather than manual processes.

Organizations adopt GitOps principles for all test assets, treating test workflows, configurations, and policies as declarative resources managed via Git. Teams implement Kubernetes-native testing solutions that integrate directly with their existing GitOps deployment pipelines, allowing test execution to be triggered by the same Git events that deploy applications.

Teams opt for GitOps controllers like Argo CD or Flux to manage test environments and configurations, Testkube for Kubernetes-native test execution and workflow orchestration, and policy-as-code tools like OPA/Kyverno for test governance and compliance validation. All test artifacts like logs and reports become auditable through Git history, supporting compliance requirements and providing complete traceability of testing decisions.

Further, progressive delivery patterns become central to this stage, with testing integrated directly into canary deployments, blue-green rollouts, and feature flag workflows. Tools like Argo Rollouts and Keptn orchestrate gradual traffic shifts while continuously validating application behavior through automated tests and quality gates. These gates act as automated decision points that promote or roll back releases based on test results, performance metrics, and business KPIs.

This shift means testing no longer just validates before deployment but continuously validates during and after deployment.
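To make the idea of a quality gate concrete, here is a minimal sketch of the promote-or-roll-back decision. It does not reflect how Argo Rollouts or Keptn implement their analysis. The function `evaluate_gate`, the metric names, and the threshold values are all assumptions chosen for illustration.

```python
def evaluate_gate(metrics, thresholds):
    """Return "promote" if every metric meets its threshold, else "rollback".

    thresholds maps a metric name to (comparison, limit), where comparison
    is "max" (value must not exceed limit) or "min" (value must reach limit).
    A metric missing from `metrics` fails the gate.
    """
    for name, (comparison, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            return "rollback"
        if comparison == "max" and value > limit:
            return "rollback"
        if comparison == "min" and value < limit:
            return "rollback"
    return "promote"


# Illustrative thresholds; the names and numbers are assumptions.
THRESHOLDS = {
    "error_rate": ("max", 0.01),
    "p95_latency_ms": ("max", 300),
    "test_pass_rate": ("min", 1.0),
}
```

Treating a missing metric as a failure is a deliberate choice here: a gate that cannot observe the canary should not promote it.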

Stage 5: Platform-Native Testing

When organizations mature, they integrate testing as part of their internal platform. Testing becomes a first-class platform service offered through internal developer platforms, providing self-service testing capabilities. Teams consume testing as a standardized platform capability offering unified workflows that work across Kubernetes, serverless, and edge environments. This enables developers to easily consume testing services without deep infrastructure knowledge.

Organizations evolve from GitOps integration by building internal developer platforms that have testing as a core service offering. Teams create unified testing experiences that hide platform-specific complexity while enabling developers to test effectively regardless of their target deployment model. This involves building platform APIs, self-service interfaces, and standardized testing workflows that work consistently across different cloud native technologies and organizational teams.

Tools like Backstage help you build internal developer portals that can integrate testing-as-a-service as plugins or service catalog offerings. Such platforms also provide automated test environment lifecycle management through custom operators, and unified developer experiences that provide consistent testing workflows.

Implementation Roadmap: Your Cloud Native GitOps Testing Journey

Understanding the maturity stages is valuable, but knowing where you stand and how to progress is critical. We’ll share a roadmap that provides concrete steps and measurable success criteria for each transition, helping you plan your evolution systematically.

Stage 1→2: From Manual to CI/CD

Key Actions:

- Integrate automated test suites into existing CI/CD pipelines as mandatory deployment gates

- Implement shift-left practices with pre-commit hooks and IDE-integrated testing

- Containerize test execution environments for consistency across developer machines

- Create dashboards that provide visibility into test results and deployment pipeline status

Success Metrics:

- Test execution frequency: Measure tests per day/week - should increase dramatically as testing becomes automated rather than periodic manual runs, indicating successful shift to continuous practices

- Mean Time to Feedback (MTTF): Track time from code commit to test results - target under 10 minutes shows developers get rapid feedback, enabling faster iteration and bug detection

- Pipeline success rate: Monitor percentage of builds passing automated tests on first run - improving rates indicate better test coverage and developer adoption of shift-left practices
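Two of these metrics are simple to compute once pipeline runs are recorded. The sketch below assumes each run is a plain dict with `committed_at`, `results_at`, and `passed_first_run` fields. That record shape is hypothetical and would need to be mapped from whatever your CI system exports.

```python
from datetime import datetime, timedelta


def mean_time_to_feedback(runs):
    """Average delay between commit and test results, or None if no runs."""
    if not runs:
        return None
    total = sum((r["results_at"] - r["committed_at"] for r in runs), timedelta())
    return total / len(runs)


def first_run_pass_rate(runs):
    """Fraction of runs that passed on the first attempt, or None if no runs."""
    if not runs:
        return None
    passed = sum(1 for r in runs if r["passed_first_run"])
    return passed / len(runs)


# Example records (made-up data for illustration only).
base = datetime(2024, 1, 1, 12, 0)
example_runs = [
    {"committed_at": base, "results_at": base + timedelta(minutes=6),
     "passed_first_run": True},
    {"committed_at": base, "results_at": base + timedelta(minutes=10),
     "passed_first_run": False},
]
```

For the example records, the mean time to feedback is 8 minutes and the first-run pass rate is 0.5, which would meet the under-10-minutes target mentioned above.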

Stage 2→3: From Continuous Testing to Infrastructure-as-Code Testing

Key Actions:

- Adopt infrastructure-as-code tools to define test environments using the same patterns as production

- Transition from shared, persistent test environments to ephemeral, on-demand environments

- Begin testing infrastructure components alongside application functionality

- Establish realistic non-functional testing with proper load profiles and chaos experiments

Success Metrics:

- Environment drift incidents: Track differences between test and production environments - should approach zero as infrastructure-as-code ensures consistency, eliminating "production-only" bugs

- Test environment provisioning frequency: Measure how often new environments are created - increase indicates teams are using ephemeral environments rather than shared, long-lived instances

- Time to reproduce production issues: Track how quickly production bugs can be replicated in test environments - reduction demonstrates improved environment parity
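Environment drift can be measured by diffing the settings of the two environments. The following is a minimal sketch, assuming each environment's configuration has already been flattened into a dict. The function name `find_drift` and the example keys are hypothetical.

```python
MISSING = "<missing>"  # marker for a setting that one side does not define


def find_drift(test_config, prod_config, ignore=()):
    """Return {key: (test_value, prod_value)} for every setting that differs.

    Keys listed in `ignore` are skipped, which is useful for settings that
    are expected to differ (replica counts, for instance).
    """
    drift = {}
    for key in sorted(set(test_config) | set(prod_config)):
        if key in ignore:
            continue
        test_value = test_config.get(key, MISSING)
        prod_value = prod_config.get(key, MISSING)
        if test_value != prod_value:
            drift[key] = (test_value, prod_value)
    return drift


# Made-up configurations for illustration only.
test_cfg = {"image": "api:1.4.2", "replicas": 1,
            "network_policy": "default-deny"}
prod_cfg = {"image": "api:1.4.2", "replicas": 3,
            "network_policy": "default-deny", "pod_security": "restricted"}
```

Counting the entries returned by `find_drift` over time gives a rough drift metric. An explicit `ignore` list keeps intentional differences from masking the unintentional ones.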

Stage 3→4: From Infrastructure-as-Code Testing to Progressive Delivery

Key Actions:

- Implement async pipelines and progressive delivery workflows for managing test configurations, environments, and policies alongside application deployments

- Adopt test orchestration tools like Testkube to execute tests within the same clusters as applications

- Integrate test execution with existing GitOps deployment pipelines using the same Git-based triggers and workflows

- Establish policy-as-code for test governance using tools like OPA/Gatekeeper to enforce testing standards

Success Metrics:

- Test-production environment parity: Measure configuration differences between test and production clusters - should reach near-zero as tests run in identical Kubernetes environments with same networking, storage, and security policies

- GitOps test workflow adoption: Track percentage of test configurations managed through Git workflows - increase shows successful transition from CI/CD-driven to GitOps-driven testing

- Policy compliance rate: Monitor automated enforcement of testing policies before deployments - high rates indicate effective governance through code rather than manual oversight
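The policy compliance rate can be illustrated with a small sketch. Real policy-as-code tools such as OPA express rules in their own policy languages, so the Python below only shows the underlying idea. The policy names, the deployment record fields, and the functions `violations` and `compliance_rate` are all assumptions.

```python
# Each policy is a (name, check) pair. A check takes a deployment record
# (a plain dict here) and returns True when the rule is satisfied.
POLICIES = [
    ("tests_passed", lambda d: d.get("tests_passed") is True),
    ("config_from_git", lambda d: d.get("config_source") == "git"),
    ("load_test_present", lambda d: "load" in d.get("test_types", [])),
]


def violations(deployment, policies=POLICIES):
    """Return the names of the policies this deployment fails."""
    return [name for name, check in policies if not check(deployment)]


def compliance_rate(deployments, policies=POLICIES):
    """Fraction of deployments with no violations, or None if empty."""
    if not deployments:
        return None
    compliant = sum(1 for d in deployments if not violations(d, policies))
    return compliant / len(deployments)
```

Keeping policies as data rather than scattered `if` statements mirrors the policy-as-code approach: rules can be versioned, reviewed, and reported on by name.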

Stage 4→5: From GitOps Integration to Platform-Native Testing

Key Actions:

- Build internal developer platforms that expose testing as standardized service offerings through APIs and self-service interfaces

- Create unified testing experiences that work consistently across Kubernetes, serverless, and edge deployment models

- Implement platform-level testing governance with automated compliance and standardized workflows across all teams

- Establish platform metrics and observability to track testing adoption, developer productivity, and platform reliability

Success Metrics:

- Platform testing adoption rate: Track percentage of teams using platform testing services versus custom solutions - high adoption indicates successful abstraction and value delivery

- Time to productive testing: Measure how quickly new teams can implement comprehensive testing - reduction shows effective platform onboarding and self-service capabilities

- Testing standardization compliance: Track adherence to organization-wide testing policies and practices - high rates show effective platform-driven governance without manual oversight

Common Pitfalls in Cloud Native Testing Evolution

Skipping Maturity Stages

Organizations are often tempted to jump directly from traditional testing to advanced GitOps or platform-native approaches without establishing foundational practices like continuous testing and infrastructure-as-code. This leads to complex implementations that teams can't maintain or understand, ultimately resulting in regression back to manual processes.

Over-Engineering Early Stages

Teams frequently introduce unnecessary complexity in early stages by adopting enterprise-grade tooling and processes before establishing basic automation fundamentals. The tooling outpaces the team’s skills and existing processes, creating overhead that slows progress and discourages adoption among developers who just want reliable, fast feedback.

Ignoring Platform-Specific Requirements

Organizations assume testing approaches that work for Kubernetes will automatically work for serverless or edge environments, leading to inappropriate tooling choices and testing strategies that don't match the operational characteristics of different cloud native platforms.

Neglecting Non-Functional Testing Evolution

Teams successfully automate functional testing but fail to evolve non-functional testing practices like performance, security, and resilience testing to match their cloud native infrastructure, missing critical operational validation that only becomes apparent in production.

Next Steps: Building Your Cloud Native Testing Strategy

The gap that we discussed at the beginning of the article between cloud native infrastructure and outdated testing practices represents one of the biggest missed opportunities in modern software delivery. This 5-stage maturity model provides a systematic path to close that gap, ensuring your testing practices evolve alongside your cloud native adoption.

Success lies in progressing gradually through each stage rather than attempting dramatic transformations. Start by assessing where your organization currently sits - most teams find themselves between Stage 1 and Stage 2 - then focus on achieving the success metrics for your next stage before advancing further.

Ready to accelerate your cloud native testing journey? Connect with our team to discuss how Testkube can help you implement Kubernetes-native testing and advance through the maturity stages. Join the conversation in our community Slack or schedule a demo to see how organizations are successfully evolving their cloud native testing practices.

About Testkube

Testkube is a cloud-native continuous testing platform for Kubernetes. It runs tests directly in your clusters, works with any CI/CD system, and supports every testing tool your team uses. By removing CI/CD bottlenecks, Testkube helps teams ship faster with confidence.

Explore the sandbox to see Testkube in action.