Try Testkube instantly in our sandbox. No setup needed.

Executive Summary

Your test just failed again. Same code. Same deployment. You re-run the job, and it passes.

Sound familiar?

Whether your tests run in a CI/CD pipeline or are triggered manually, you've likely been caught in this frustrating loop before. Welcome to the world of flaky tests. But here's the thing: although flakiness often stems from the tests themselves, the culprit can also be found elsewhere.

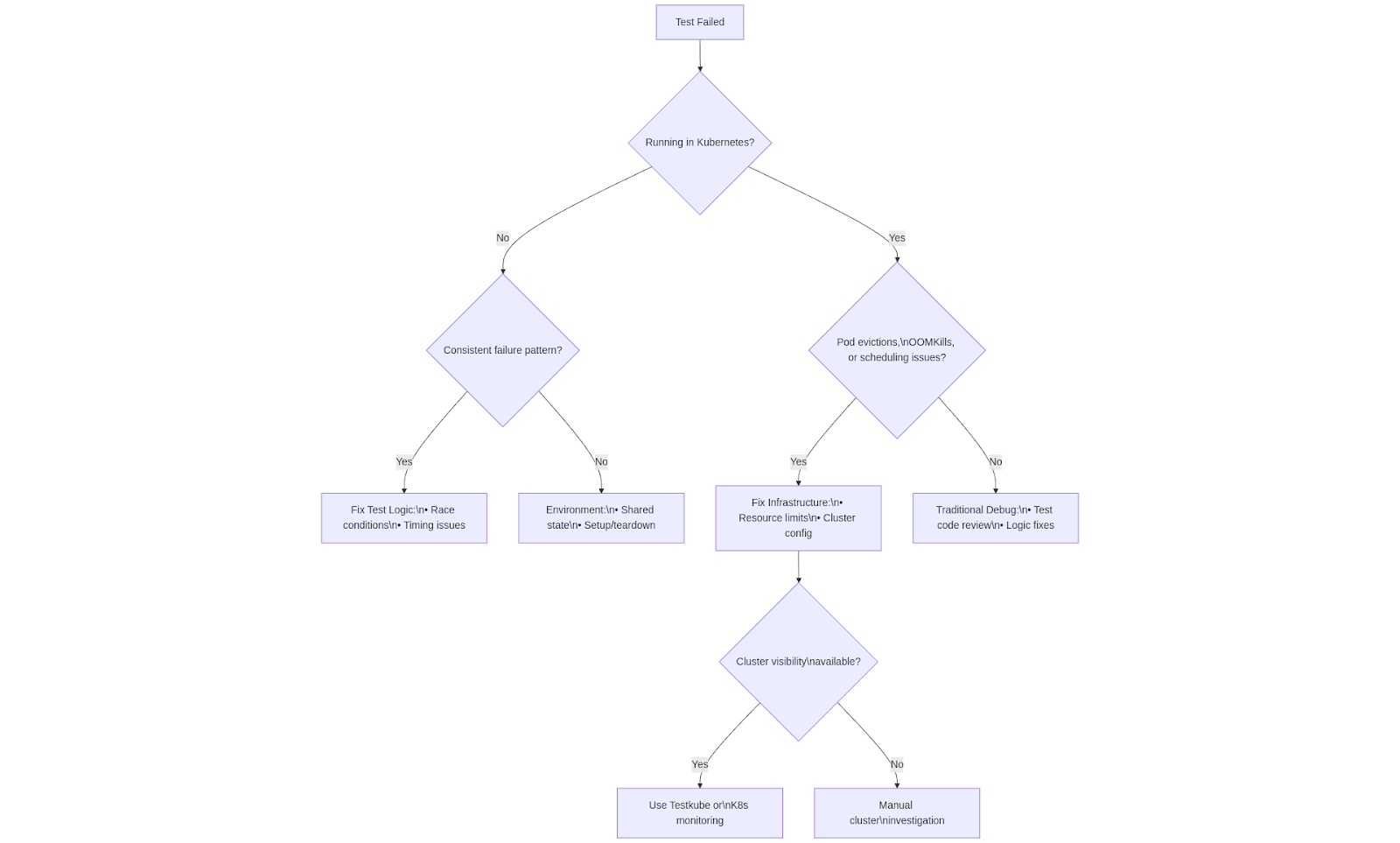

Traditional flaky test debugging follows a predictable pattern. You examine your test logic, hunt for timing issues, and refactor problematic assertions to make them more resilient to unforeseen (but still mostly valid) behaviour in your application or service under test. Sometimes this works. Other times, you're left scratching your head because the test logic looks solid. And sometimes, it just needs to be muted.

If you're running tests in Kubernetes, you might be dealing with something entirely different: workflow-level flakiness caused by underlying infrastructure. Your pods are getting evicted. Your tests are hitting cluster-wide timeouts. The Kubernetes scheduler is making decisions that have nothing to do with your carefully crafted test code.

This shift toward container orchestration has introduced a new category of failures that many teams don't recognize. They're still debugging their test code when the real problem lies in how their cluster is configured, or in how it's being used while their tests are running. Dang!

Let's break down this distinction and explore how to identify and fix it.

What Are Flaky Tests?

A flaky test is one that passes or fails inconsistently without any code changes. These failures usually stem from problems in the test itself.

Common causes include:

- Race conditions

- Poorly scoped setup/teardown logic

- Timing issues or asynchronous behavior

- Shared or leaking state between tests

These problems are especially common in integration, end-to-end, and UI tests.

Real-world examples:

- Playwright or Cypress end-to-end test: Fails intermittently in GitHub Actions because the DOM takes a bit longer to load, so the element lookup (for example, Cypress's cy.get()) times out before the element appears.

- JUnit database test: Occasionally fails because previous test data wasn't cleaned up properly.

- Postman test suite: Runs fine locally but fails in CI due to missing environment variables or hitting an API rate limit.

- Selenium UI test: Fails only when run after another test that doesn't fully reset the UI state, causing false positives or negatives.

Debugging and fixing these problems is time-consuming. You might insert sleep() statements, isolate tests, or rewrite them entirely, but fixing them isn't always as straightforward as you'd hoped. How can you ensure that no other tests or processes are interfering with the state of your application? How can you hedge against unforeseen timeouts without compromising the test itself? And what if your test logic is solid and something else is breaking it?
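A common alternative to a fixed sleep() is to poll for the exact condition the test depends on, with an explicit upper bound. The test then proceeds as soon as it can and fails with a clear result when it can't. Cypress and Playwright already build this kind of retrying into their queries and assertions. For custom checks such as a database row, a file, or a queue message, a minimal Python sketch might look like the one below. The `wait_until` name and its defaults are illustrative and don't come from any particular library:

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout: float = 10.0,
    interval: float = 0.1,
) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Unlike a fixed sleep(), this returns as soon as the condition holds,
    and returns False (instead of hanging or guessing) when it never does.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Hypothetical usage inside a test: instead of time.sleep(5), wait for the
# record the test depends on (db.find_order is an illustrative helper).
# assert wait_until(lambda: db.find_order("A-42") is not None, timeout=15)
```

This addresses test-level timing issues only. It won't help if the pod running the test is evicted halfway through.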

The Critical Distinction: Test-Level vs Workflow-Level Flakiness

Understanding this distinction is critical for debugging and preventing flakiness in modern pipelines running on Kubernetes.

[Table: Test Flakiness Comparison, showing the differences between test-level and workflow-level flakiness]

Flakiness Has Evolved: Workflow-Level Failures

As more teams move test execution into Kubernetes-based pipelines, a different kind of flakiness has emerged: workflow-level flakiness.

This isn't about the test code failing. It's about the infrastructure interfering with your tests.

Common scenarios:

- Kubernetes evicts your test pod because its node is under resource pressure, or the container is OOMKilled for exceeding its memory limit

- Your test exceeds an infrastructure-level timeout (for example, a Job's activeDeadlineSeconds) and is terminated prematurely

- Pods sit in Pending, stuck in the scheduling queue during high load

- Test pods are preempted or starved of CPU because of misconfigured priorities or other services competing for the same resources

Another variant arises with heterogeneous test sets, or with complex hierarchies of tests orchestrated into a comprehensive end-to-end suite. For example, when one test's output is another test's input, a single flaky test can cause ripple effects across your entire testing suite.
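One way to keep a single failure from masquerading as many is to run chained tests in dependency order. Tests whose inputs never materialized are then marked as skipped rather than failed. Below is a minimal Python sketch using the standard library's `graphlib`. The test names, the `run` callback, and the dependency-map format are hypothetical:

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Set


def run_pipeline(
    deps: Dict[str, Set[str]],
    run: Callable[[str], bool],
) -> Dict[str, str]:
    """Run tests in dependency order, skipping dependents of non-passing tests.

    `deps` maps each test to the set of tests whose output it consumes.
    `run` executes one test and returns True if it passed.
    """
    results: Dict[str, str] = {}
    # static_order() yields every test after all of its upstream tests.
    for test in TopologicalSorter(deps).static_order():
        upstream = deps.get(test, set())
        if any(results[u] != "passed" for u in upstream):
            # The input this test needs was never produced; reporting it as a
            # failure would blame the wrong test.
            results[test] = "skipped"
            continue
        results[test] = "passed" if run(test) else "failed"
    return results
```

With this approach, one broken upstream test produces one "failed" result and a set of clearly labeled "skipped" results, instead of a wall of red that all has to be triaged separately.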

These failures often look like flaky tests, but not for the usual reasons: they're flaky because of how Kubernetes manages workloads, not because of how your tests are written.
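As a first triage step, the status fields Kubernetes already exposes on the Pod and its Job can separate the scenarios above from genuine test failures. The following is a minimal Python sketch operating on those objects as plain dicts (for example, parsed from `kubectl get pod <name> -o json`). The function name and the three-way result are illustrative assumptions. Real triage would also look at events and node conditions:

```python
from typing import Any, Dict, List


def classify_failure(pod: Dict[str, Any], job_conditions: List[Dict[str, Any]]) -> str:
    """Classify a failed test run as 'infrastructure', 'test', or 'unknown'.

    `pod` is a Pod object as a dict; `job_conditions` is the owning Job's
    status.conditions list.
    """
    status = pod.get("status", {})

    # Node-pressure eviction: the pod's status.reason is "Evicted".
    if status.get("reason") == "Evicted":
        return "infrastructure"

    # The Job hit spec.activeDeadlineSeconds and its pods were terminated.
    for cond in job_conditions:
        if cond.get("type") == "Failed" and cond.get("reason") == "DeadlineExceeded":
            return "infrastructure"

    # The pod was never placed on a node.
    for cond in status.get("conditions", []):
        if (
            cond.get("type") == "PodScheduled"
            and cond.get("status") == "False"
            and cond.get("reason") == "Unschedulable"
        ):
            return "infrastructure"

    for cs in status.get("containerStatuses", []):
        terminated = cs.get("state", {}).get("terminated")
        if not terminated:
            continue
        # Killed for exceeding its memory limit. This could still be a leak in
        # the test, but resource sizing is the first thing to check.
        if terminated.get("reason") == "OOMKilled":
            return "infrastructure"
        # The test process exited non-zero on its own: a genuine test failure.
        if terminated.get("exitCode", 0) != 0:
            return "test"

    return "unknown"
```

The order of the checks matters. When a Job deadline is exceeded, its pod is killed and exits non-zero, so the deadline check has to run before the exit-code check. Otherwise the failure would be misattributed to the test.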

Why Kubernetes Makes Flakiness More Common

Kubernetes is a powerful platform for managing scalable, containerized workloads (including tests).

It enables:

- Massive parallelism for fast test execution

- Cost efficiency through autoscaling and shared infrastructure

- Environment parity with production setups

- Namespace isolation for ephemeral environments

But here's the tradeoff: when you offload test execution to Kubernetes, you gain power and scalability, but you also take on complexity. If the cluster isn't configured correctly, it can introduce flakiness that has nothing to do with your test logic.

Testing Challenges in Kubernetes

While Kubernetes offers powerful orchestration capabilities, it introduces unique challenges that can turn reliable tests into unpredictable failures.

Resource Competition and Timing Issues

In shared clusters, tests compete for resources and face scheduling unpredictability. Memory-intensive tests get OOMKilled during high load. CPU-bound tests time out on oversubscribed nodes. Pod startup times vary dramatically depending on node resources and image pulls. Tests may start before dependent services are ready, or get delayed by cluster autoscaling decisions.
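One detail explains many "stuck in Pending" runs. The scheduler places pods based on their resource *requests*, compared against each node's allocatable capacity, not on what the node is actually using at that moment. Below is a simplified Python sketch of that fit check. The real scheduler's NodeResourcesFit plugin also accounts for pod overhead, extended resources, and more, and the dataclass and units here are illustrative:

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Resources:
    cpu_millicores: int
    memory_mib: int


def fits_on_node(
    allocatable: Resources,
    scheduled_requests: List[Resources],
    new_request: Resources,
) -> bool:
    """Simplified version of the scheduler's resource-fit check.

    Sums the requests already scheduled on the node plus the new pod's request
    and compares them to allocatable capacity. Live usage is never consulted.
    """
    cpu = sum(r.cpu_millicores for r in scheduled_requests) + new_request.cpu_millicores
    mem = sum(r.memory_mib for r in scheduled_requests) + new_request.memory_mib
    return cpu <= allocatable.cpu_millicores and mem <= allocatable.memory_mib


node = Resources(cpu_millicores=4000, memory_mib=8192)
already_scheduled = [Resources(1500, 3072), Resources(2000, 4096)]
print(fits_on_node(node, already_scheduled, Resources(500, 1024)))  # True: exactly fills the node
print(fits_on_node(node, already_scheduled, Resources(600, 512)))   # False: CPU requests would exceed 4000m
```

This cuts both ways. Inflated requests leave pods Pending on a cluster that looks idle. Requests set too low let the scheduler pack pods onto a node that can't actually sustain them, which is where OOMKills and node-pressure evictions come from.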

Networking and State Management

Kubernetes networking adds complexity that breaks tests in unexpected ways. Service discovery fails when DNS propagation is slow. Network policies block traffic that worked in development. Shared volumes cause test isolation issues, and database state bleeds between test runs in different pods.
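A common mitigation for state bleeding between runs is to give every run its own namespace, and with it its own database instance or schema. Namespace names must be valid RFC 1123 labels: at most 63 characters of lowercase alphanumerics and '-', starting and ending with an alphanumeric. The minimal Python sketch below derives such a name from a run identifier. The prefix and run-ID formats are assumptions:

```python
import re
import uuid
from typing import Optional

# Namespace names must be RFC 1123 labels: lowercase alphanumerics and '-',
# starting and ending with an alphanumeric, at most 63 characters.
MAX_LABEL_LEN = 63
DNS1123_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def _sanitize(part: str) -> str:
    """Lowercase and replace runs of disallowed characters with '-'."""
    return re.sub(r"[^a-z0-9-]+", "-", part.lower()).strip("-")


def ephemeral_namespace(prefix: str, run_id: Optional[str] = None) -> str:
    """Return a unique, valid namespace name for a single test run."""
    # Fall back to a random 8-hex-digit suffix when no run ID is supplied.
    suffix = _sanitize(run_id or uuid.uuid4().hex[:8])
    # Truncate the prefix, never the run ID, so names stay unique per run.
    head = _sanitize(prefix)[: MAX_LABEL_LEN - len(suffix) - 1].rstrip("-")
    name = f"{head}-{suffix}" if head else suffix
    if len(name) > MAX_LABEL_LEN or not DNS1123_LABEL.fullmatch(name):
        raise ValueError(f"cannot build a valid namespace name from {prefix!r}, {run_id!r}")
    return name
```

For example, `ephemeral_namespace("Checkout E2E", "PR_1234")` yields `checkout-e2e-pr-1234`. Creating that namespace at the start of a run and deleting it at the end keeps one run's data out of the next.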

Environment Drift and Observability Gaps

Dynamic Kubernetes environments change between test runs, introducing subtle inconsistencies. Different node configurations, varying container runtime versions, and cluster-level changes affect test behavior. Traditional testing tools struggle with distributed environments: logs are scattered across pods, and there's no clear way to distinguish test logic failures from infrastructure problems.

These challenges explain why teams often struggle with "flaky" tests after moving test execution to Kubernetes. The tests themselves might be fine, but the execution environment has become far more complex and unpredictable.

Solving Workflow-Level Flakiness

Traditional testing tools treat Kubernetes as a black box. When your pod gets OOMKilled, preempted, or stuck in scheduling queues, they simply report "failure" without context. This leaves teams chasing ghosts, investigating test failures that aren't actually code problems.

Solving workflow-level flakiness requires a testing platform that understands both your tests and the Kubernetes environment running them. This is where Testkube makes the difference.

Testkube is a cloud-native continuous testing platform that runs tests directly in your cluster as Kubernetes jobs. By operating within your infrastructure rather than outside it, Testkube provides comprehensive visibility into both test outcomes and the underlying system health that affects them.

Infrastructure-Aware Test Execution

Testkube eliminates the guesswork around test failures by monitoring the complete execution context. When tests run as native Kubernetes jobs within your cluster, they have direct access to services and databases without network-related flakiness from external runners. More importantly, Testkube tracks the health of test pods themselves, detecting when failures stem from resource constraints, evictions, or scheduling issues rather than actual code problems.

Instead of generic "failed" status reports, Testkube provides clear classification: Passed (successful completion), Failed (genuine test failure), Cancelled (user-stopped), or Aborted (infrastructure issues prevented completion). This distinction alone saves teams countless hours of misdirected debugging.

Advanced Workflow Intelligence

Testkube's Workflow Health System goes beyond individual test results to identify patterns of instability. The platform automatically detects flaky workflows by measuring how often they oscillate between passing and failing states, helping you address reliability issues before they undermine team confidence.

Visual health indicators provide instant visibility into test suite reliability, while workflow health scoring quantifies the consistency of each test workflow by analyzing both pass rates and flakiness patterns. This data-driven approach helps you prioritize which tests need attention most urgently.
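This article doesn't spell out Testkube's exact formulas, so the following only illustrates the idea. Flakiness is measured as how often consecutive runs flip between pass and fail, and it is combined with the pass rate into a single score. The multiplication in `health_score` below is an assumption chosen for simplicity, not Testkube's documented method:

```python
from typing import Sequence


def flip_rate(results: Sequence[bool]) -> float:
    """Fraction of consecutive run pairs whose outcome changed (pass <-> fail)."""
    if len(results) < 2:
        return 0.0
    flips = sum(1 for prev, cur in zip(results, results[1:]) if prev != cur)
    return flips / (len(results) - 1)


def pass_rate(results: Sequence[bool]) -> float:
    """Fraction of runs that passed."""
    return sum(results) / len(results) if results else 0.0


def health_score(results: Sequence[bool]) -> float:
    """Hypothetical score in [0, 1]: high only if runs pass AND are consistent."""
    return pass_rate(results) * (1.0 - flip_rate(results))


flaky = [True, False, True, False, True]    # flip rate 1.0, health 0.0
mostly_green = [True] * 9 + [False]          # flip rate 1/9, health ~0.8
broken = [False] * 5                         # flip rate 0.0, health 0.0
```

Note that `broken` has a flip rate of 0. It fails consistently, so it's broken rather than flaky, and only the pass-rate term drags its score down. Keeping the two signals separate is what lets you tell those situations apart.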

Scalable Multi-Cluster Orchestration

Modern development teams need testing that scales with their infrastructure complexity. Testkube supports namespace-scoped execution and ephemeral environments, eliminating shared-state issues that create false positives. Multi-cluster scaling with time zone support enables seamless global testing coordination, while Helm chart deployment ensures consistent automation across all environments.

Comprehensive Test Orchestration

Complex applications require sophisticated testing workflows. Testkube handles hierarchical and heterogeneous test workflows where one test's output becomes another's input, with built-in retry logic and execution branching to prevent cascade failures. Custom workflow views let you filter and group tests by repository, branch, team, or custom attributes, providing clear pathways to release decisions without information overload.
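The reasoning behind retry logic is worth making explicit. Retrying a genuine test failure only hides it. Retrying an infrastructure failure, such as an eviction or a scheduling timeout, often succeeds once the cluster recovers. Below is a minimal Python sketch of that policy with exponential backoff. The `run_once` callback and its `(passed, cause)` return shape are hypothetical, and this is not Testkube's Test Workflow syntax:

```python
import time
from typing import Callable, Tuple


def run_with_retries(
    run_once: Callable[[], Tuple[bool, str]],
    max_attempts: int = 3,
    base_delay: float = 1.0,
) -> Tuple[bool, str, int]:
    """Retry only failures classified as infrastructure.

    `run_once` returns (passed, cause), where cause is e.g. "test" or
    "infrastructure". Returns (passed, last_cause, attempts_used).
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    attempt = 0
    while True:
        attempt += 1
        passed, cause = run_once()
        # Stop on success, on a genuine test failure, or when out of attempts.
        if passed or cause != "infrastructure" or attempt >= max_attempts:
            return passed, cause, attempt
        # Exponential backoff gives the cluster time to free resources.
        time.sleep(base_delay * 2 ** (attempt - 1))
```

A test failure returns after a single attempt, so it still surfaces as a failure instead of being masked by a lucky re-run.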

Centralized observability automatically collects logs, artifacts, and results in one place, eliminating the scattered data hunt that plagues traditional testing setups.

[Figure: Flaky Test / Flaky Infrastructure Debugging Decision Tree]

The Bottom Line

With Testkube, you're no longer guessing why tests fail. You get clear visibility into what broke in your workflows and whether the issue requires code changes or infrastructure attention. This clarity transforms testing from a source of friction into a reliable foundation for continuous delivery.

Best Practices for Preventing Workflow-Level Flakiness

Whether you're using Testkube or not, configuring your Kubernetes cluster properly is essential for stable test execution.

Cluster-side recommendations:

- Set accurate CPU and memory requests and limits for both your workloads and your tests

- Use PodDisruptionBudgets to guard test pods against voluntary disruptions such as node drains and autoscaler scale-downs

- Monitor resource usage of long-running test pods that trigger timeouts

- Apply affinity/anti-affinity rules to balance test jobs

- Configure autoscaling policies to handle testing surges
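Several of these recommendations map directly onto standard Kubernetes fields. The sketch below builds a test Job and a matching PodDisruptionBudget as Python dicts and prints them as JSON, which `kubectl apply -f` accepts just like YAML. The names, image, resource sizes, deadline, and the `test-workloads` PriorityClass are placeholders; the PriorityClass must already exist in your cluster. Tune the values to your tests' measured usage:

```python
import json
from typing import Any, Dict


def build_test_job_manifests(
    name: str,
    image: str,
    cpu: str = "500m",
    memory: str = "1Gi",
    deadline_seconds: int = 1800,
    priority_class: str = "test-workloads",
) -> Dict[str, Any]:
    """Build a Job plus a PodDisruptionBudget for one test run, as a v1 List."""
    labels = {"app.kubernetes.io/name": name, "app.kubernetes.io/component": "tests"}
    # requests == limits for every resource => Guaranteed QoS class, which makes
    # the pod one of the last candidates for node-pressure eviction.
    resources = {"cpu": cpu, "memory": memory}
    job = {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "backoffLimit": 0,  # no automatic pod re-runs; retries stay with your test tooling
            "activeDeadlineSeconds": deadline_seconds,  # explicit, visible timeout
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "restartPolicy": "Never",
                    # Placeholder PriorityClass; it must already exist in the cluster.
                    "priorityClassName": priority_class,
                    "containers": [{
                        "name": "tests",
                        "image": image,
                        "resources": {"requests": dict(resources), "limits": dict(resources)},
                    }],
                    # Prefer spreading pods with these labels across nodes.
                    "affinity": {
                        "podAntiAffinity": {
                            "preferredDuringSchedulingIgnoredDuringExecution": [{
                                "weight": 100,
                                "podAffinityTerm": {
                                    "labelSelector": {"matchLabels": labels},
                                    "topologyKey": "kubernetes.io/hostname",
                                },
                            }],
                        },
                    },
                },
            },
        },
    }
    # maxUnavailable: 0 blocks voluntary evictions (node drains, autoscaler
    # scale-down) of matching pods while the test runs. It does not prevent
    # node-pressure evictions or OOMKills.
    pdb = {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": f"{name}-pdb"},
        "spec": {"maxUnavailable": 0, "selector": {"matchLabels": dict(labels)}},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [job, pdb]}


if __name__ == "__main__":
    # Placeholder name and image; pipe the output to `kubectl apply -f -`.
    print(json.dumps(build_test_job_manifests("checkout-e2e", "registry.example.com/e2e:1.0"), indent=2))
```

Setting a CPU limit involves a tradeoff. It's required for the Guaranteed QoS class, but it also lets the kernel throttle the test at that limit, so size it from observed usage rather than guesswork.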

Testkube-specific strategies:

- Use Test Workflows for retries, conditional logic, and sequencing

- Scope execution to ephemeral namespaces to avoid shared-state issues

- Track test outcomes by root cause, not just pass/fail

- Monitor resource usage of flaky tests to ensure adequate resource allocation

Flaky Infrastructure ≠ Flaky Tests

When your test fails, it's natural to assume the test is the problem. But in modern cloud-native environments, that's not always true. Your tests might be solid. The failure might stem from a misconfigured autoscaler, an overloaded node, or a timeout defined at the infrastructure level.

Recognizing that difference is the first step toward building reliable pipelines.

As teams adopt Kubernetes for test execution, the scope of what causes flakiness has expanded. It's not just about fixing flaky test code anymore. It's about gaining visibility into how your environment affects test outcomes. Kubernetes brings scalability and speed, but it also introduces new failure modes that you have to manage.

Testkube helps you bridge that gap. Get started today.

About Testkube

Testkube is a cloud-native continuous testing platform for Kubernetes. It runs tests directly in your clusters, works with any CI/CD system, and supports every testing tool your team uses. By removing CI/CD bottlenecks, Testkube helps teams ship faster with confidence.

Explore the sandbox to see Testkube in action.