Executive Summary

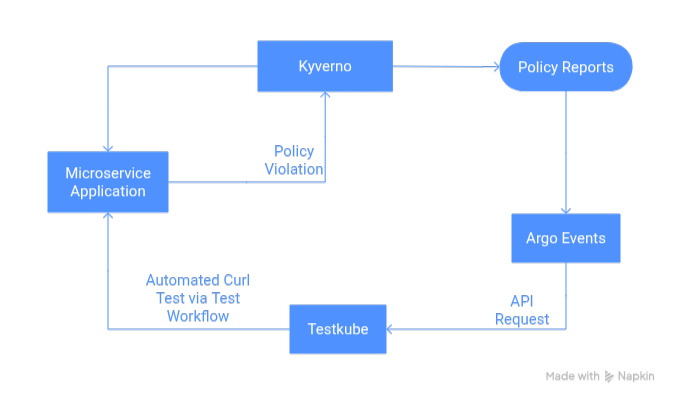

Policies are not just guardrails for cluster security and compliance. They can also act as powerful triggers for automated test execution. Testkube, a cloud native test orchestration tool, can trigger tests at any phase of the SDLC. By integrating Testkube with policy engines such as Kyverno or OPA, teams can extend policy enforcement into automated test execution.

- Validate manifests, Helm charts, or environments by running tests with Testkube alongside policy checks.

- Trigger regression or smoke tests automatically when a policy violation is detected post-deployment.

- Gain visibility into policy health using Testkube’s observability and reporting features, making it easier to track recurring violations, measure policy flakiness, and analyze trends over time.

This approach ensures that policy failures don’t just block deployments but also provide actionable feedback through targeted testing, strengthening the feedback loop in your delivery pipeline.

Real-World Use Cases of Policy-as-Code in Testing Pipelines

In the following example, a policy checks that every Deployment in the default namespace requests at least 200m of CPU and a non-zero amount of memory. When a Deployment is updated to request less than the minimum, a smoke test runs to confirm that the application still behaves as expected with limited resources.

In this use case, the following tool stack will be used:

- Kyverno: Deploys a resource-check policy on the cluster that validates that Deployments request enough resources and generates policy reports on violations.

- Testkube: Runs a smoke test against a microservice application using curl.

- Argo Events: Captures the Kyverno policy violations and triggers the smoke test through Testkube.

Preparing your environment

- A Kubernetes cluster - we’re using a local Minikube cluster

- Helm installed

- kubectl installed

- Argo Events installed and set up on the cluster

- Testkube account configured

- Testkube Environment with the Testkube Agent configured on the cluster.

- A Testkube API token generated using the Testkube Dashboard.

- Kyverno installed

- Repository cloned for configuration files.

- Create a Secret for the Testkube API token in the argo-events namespace:

$ kubectl create secret generic testkube-auth-secret --from-literal=TESTKUBE_API_TOKEN="Bearer tkcapi_xxxxxxxxxxxxxxx" -n argo-events

secret/testkube-auth-secret created

Verify the status of pods of all the tools deployed on the cluster.

$ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

argo-events controller-manager-75cb8f59ff-l9qzp 1/1 Running 0 3h

argo-events eventbus-default-stan-0 2/2 Running 0 3h

argo-events eventbus-default-stan-1 2/2 Running 0 179m

argo-events eventbus-default-stan-2 2/2 Running 0 179m

argo-events events-webhook-64648b5657-9fxq7 1/1 Running 0 3h

argo-events kyverno-policyreport-events-eventsource-l5qkc-fc65547b6-4jjdg 1/1 Running 0 175m

argo-events kyverno-violation-sensor-sensor-pfbr6-6c56c99796-prklb 1/1 Running 0 147m

ingress-nginx ingress-nginx-admission-create-kmrsf 0/1 Completed 0 8h

ingress-nginx ingress-nginx-admission-patch-l6fxx 0/1 Completed 0 8h

ingress-nginx ingress-nginx-controller-7c6974c4d8-zg2bp 1/1 Running 0 8h

kyverno kyverno-admission-controller-6f8d7f88d7-rs666 1/1 Running 0 6h44m

kyverno kyverno-background-controller-654dc6fccb-vkvz2 1/1 Running 0 6h44m

kyverno kyverno-cleanup-admission-reports-29274930-nfstq 0/1 Completed 0 8m13s

kyverno kyverno-cleanup-cluster-admission-reports-29274930-pqm44 0/1 Completed 0 8m13s

kyverno kyverno-cleanup-controller-699f956655-qtq79 1/1 Running 0 6h44m

kyverno kyverno-reports-controller-755cd68588-vvhbj 1/1 Running 0 6h44m

testkube testkube-api-server-fc9c8c7bb-99cw8 1/1 Running 0 7h53m

testkube testkube-nats-0 2/2 Running 0 7h53m

testkube testkube-operator-controller-manager-665f8479bc-bj2k2 1/1 Running 0 7h53m

Deploy a microservice application

We have created a microservice application that takes a username and prints a hello message with the current date and time.

:~/github/userapp$ kubectl apply -f k8s/

deployment.apps/userapp created

ingress.networking.k8s.io/userapp-ingress created

service/userapp created

For this use case, ingress is enabled on the Minikube cluster, and its IP is added to the hosts file to allow access to the application.

$ curl http://userapp.local/?name=Nova

{"message":"Hello Nova, current date and time is 2025-08-29 17:11:31"}

Create Smoke Test in Testkube

Testkube provides the capability to manage tests as code with Test Workflows. In this use case, the TestWorkflow will trigger a curl test to the application endpoint.

apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: smoke-nova
  namespace: testkube
  labels:
    argoevent: "true"
spec:
  steps:
  - name: Run curl
    container:
      image: curlimages/curl:8.7.1
    shell: curl http://userapp.local/?name=nova

The TestWorkflow can be created using the Testkube Dashboard, as shown below.

You can verify using Testkube CLI that the TestWorkflow `smoke-nova` is created in Testkube.

$ testkube get tw smoke-nova

...

Test Workflow:

Name: smoke-nova

Namespace: testkube

Created: 2025-08-29 19:29:58 +0000 UTC

Labels: argoevent=true

To trigger the TestWorkflow from Argo Events, look up the TestWorkflow execution endpoint in the Testkube API documentation.

Configure RBAC

For this integration, you need to configure some roles and role bindings to allow access to the Kyverno PolicyReport resources. These are available in the rbac directory in the repository.

:~/github/userapp$ kubectl apply -f rbac/

clusterrole.rbac.authorization.k8s.io/argo-events-policyreport-reader created

clusterrolebinding.rbac.authorization.k8s.io/argo-events-policyreport-binding created

clusterrole.rbac.authorization.k8s.io/kyverno-admission-reports created

clusterrolebinding.rbac.authorization.k8s.io/kyverno-admission-reports created

clusterrole.rbac.authorization.k8s.io/kyverno-reports-controller-complete created

clusterrolebinding.rbac.authorization.k8s.io/kyverno-reports-controller-complete created

role.rbac.authorization.k8s.io/kyverno-reports-controller-namespace created

rolebinding.rbac.authorization.k8s.io/kyverno-reports-controller-namespace created

Create a Kyverno Policy

The following Kyverno policy checks that every container in a Deployment in the default namespace sets a memory request and requests at least 200m of CPU.

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-resources
spec:
  validationFailureAction: Audit
  background: true
  rules:
  - name: require-resources-in-deployment
    match:
      resources:
        kinds:
        - Deployment
        namespaces:
        - default
    validate:
      message: "CPU request must be at least 200m and memory request must be specified for all containers in a Deployment."
      anyPattern:
      - spec:
          template:
            spec:
              containers:
              - resources:
                  requests:
                    # memory must exist (any non-empty value)
                    memory: "?*"
                    # CPU must be >= 200m
                    cpu: "2??m | [3-9]??m | ????m | ?.? | [1-9][0-9]*"

Apply the policy manifest on the Kubernetes cluster.

$ kubectl apply -f policies/limit-resources.yaml

clusterpolicy.kyverno.io/require-resources created

Check for policy violations in policy reports.

$ kubectl get policyreports -A

No resources found

Currently, there are no policy violations in the default namespace on the cluster.
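To make the threshold in the policy concrete, the following Python sketch expresses the intended check (a memory request is present and the CPU request is at least 200m). It illustrates the intent only and is not how Kyverno evaluates patterns. The function names are hypothetical, and the parser assumes CPU quantities written either in millicores (`250m`) or as plain decimals (`0.5`, `2`).

```python
from decimal import Decimal

# Threshold taken from the policy message: CPU request must be at least 200m.
MIN_CPU_MILLICORES = 200


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "250m", "0.5" or "2" to millicores.

    Assumption: only the millicore suffix and plain decimal forms are handled.
    """
    quantity = quantity.strip()
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def satisfies_policy(requests: dict) -> bool:
    """Return True when memory is set and the CPU request is >= 200m."""
    memory = requests.get("memory", "")
    cpu = requests.get("cpu")
    if not memory or cpu is None:
        return False
    return cpu_to_millicores(str(cpu)) >= MIN_CPU_MILLICORES


# A container requesting 120m of CPU falls below the threshold.
print(satisfies_policy({"cpu": "120m", "memory": "128Mi"}))
# A container requesting half a core satisfies it.
print(satisfies_policy({"cpu": "0.5", "memory": "128Mi"}))
```

A check like this can be handy when reviewing manifests by hand, since it spells out which values the policy is meant to accept.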

Capture Kyverno Policy Violations with ArgoEvents EventSource

PolicyReports record policy violations and count them in the fail field of their summary. The following EventSource watches for updates to PolicyReports in the default namespace and passes on only those where the fail count is greater than zero and at least one result is `fail`.

apiVersion: argoproj.io/v1alpha1
kind: EventSource
metadata:
  name: kyverno-policyreport-events
  namespace: argo-events
spec:
  template:
    serviceAccountName: argo-events-sa
    container:
      resources:
        requests:
          cpu: "100m"
          memory: "128Mi"
        limits:
          cpu: "500m"
          memory: "512Mi"
  eventBusName: default
  resource:
    kyverno-policy-failures:
      version: v1alpha2
      group: wgpolicyk8s.io
      resource: policyreports
      namespace: default
      eventTypes:
      - UPDATE
      filter:
        expression: |
          body.summary.fail > 0 &&
          any(body.results, {.result == "fail"})
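
As a rough illustration of what the filter expression selects, the sketch below applies the same two conditions to a PolicyReport represented as a Python dictionary. The function name and the sample report are hypothetical; only the `summary.fail` and `results[].result` fields come from the expression above.

```python
def is_violation_event(body: dict) -> bool:
    """Mirror the filter: summary.fail > 0 and at least one result is "fail"."""
    fail_count = body.get("summary", {}).get("fail", 0)
    results = body.get("results") or []
    return fail_count > 0 and any(r.get("result") == "fail" for r in results)


# Hypothetical PolicyReport body with one failing result.
failing_report = {
    "summary": {"pass": 0, "fail": 1},
    "results": [{"policy": "require-resources", "result": "fail"}],
}

# Hypothetical PolicyReport body where everything passes.
passing_report = {
    "summary": {"pass": 1, "fail": 0},
    "results": [{"policy": "require-resources", "result": "pass"}],
}

print(is_violation_event(failing_report))
print(is_violation_event(passing_report))
```

Note that the conditions test the current state of the report, so any update to a report that still contains a failure would match, not only the update that introduced it.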

Apply the manifest on the cluster to configure EventSource.

$ kubectl apply -f argo-events/event-source.yaml

eventsource.argoproj.io/kyverno-policyreport-events created

Trigger Testkube API with ArgoEvents Sensor

The Sensor listens for events and, when an event matches its dependencies, fires a trigger. A trigger can do something as simple as write a log entry or send an API request. In this use case, when the EventSource captures a policy violation event, the Sensor sends a POST request to the Testkube API to run the TestWorkflow.

apiVersion: argoproj.io/v1alpha1
kind: Sensor
metadata:
  name: kyverno-violation-sensor
  namespace: argo-events
spec:
  template:
    serviceAccountName: argo-events-sa
    container:
      resources:
        requests:
          cpu: "100m"
          memory: "128Mi"
        limits:
          cpu: "500m"
          memory: "512Mi"
  eventBusName: default
  dependencies:
  - name: kyverno-violation-dep
    eventSourceName: kyverno-policyreport-events
    eventName: kyverno-policy-failures
  triggers:
  - template:
      name: trigger-testkube
      conditions: "kyverno-violation-dep"
      http:
        url: http://api.testkube.io/organizations/tkcorg_b8ddc820d4919590/environments/tkcenv_94cb6305570f69bd/agent/test-workflows/smoke-nova/executions
        payload:
        - src:
            dependencyName: kyverno-violation-dep
            dataKey: body.metadata.name
          dest: tags.PolicyViolationName
        method: POST
        headers:
          Content-Type: application/json
        secureHeaders:
        - name: Authorization
          valueFrom:
            secretKeyRef:
              name: testkube-auth-secret
              key: TESTKUBE_API_TOKEN

The Sensor also sends the PolicyReport name to Testkube as a tag, which makes it easier to track which resource violated the policy.
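
For readers who want to see the shape of the request the trigger produces, here is a minimal Python sketch that builds an equivalent request without sending it. The URL path and the `tags.PolicyViolationName` body field follow the Sensor manifest; the function name, the organization and environment IDs, and the token value are placeholders, and the exact request Argo Events emits may differ in details such as additional headers.

```python
import json
import urllib.request

# Host as written in the Sensor manifest.
API_BASE = "http://api.testkube.io"


def build_execution_request(org_id: str, env_id: str, workflow: str,
                            report_name: str, auth_header: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that starts a TestWorkflow execution.

    auth_header is the full header value as stored in the Secret,
    for example "Bearer tkcapi_...".
    """
    url = (
        f"{API_BASE}/organizations/{org_id}/environments/{env_id}"
        f"/agent/test-workflows/{workflow}/executions"
    )
    # dest: tags.PolicyViolationName becomes a nested JSON key in the body.
    body = json.dumps({"tags": {"PolicyViolationName": report_name}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": auth_header},
    )


# Placeholder identifiers, for illustration only.
req = build_execution_request(
    "tkcorg_example", "tkcenv_example", "smoke-nova",
    "example-policy-report", "Bearer tkcapi_example",
)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Building the request separately like this can help when debugging the integration, because the same URL, headers, and body can be replayed by hand against the API.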

Apply the manifest on the cluster to configure the Sensor.

$ kubectl apply -f argo-events/sensor.yaml

sensor.argoproj.io/kyverno-violation-sensor created

Check the status of EventSource and Sensor.

$ kubectl get pods -n argo-events

NAME READY STATUS RESTARTS AGE

controller-manager-75cb8f59ff-l9qzp 1/1 Running 0 3h44m

eventbus-default-stan-0 2/2 Running 0 3h44m

...

kyverno-policyreport-events-eventsource-bvc7q-7c5cf9b856-lqlq9 1/1 Running 0 10s

kyverno-violation-sensor-sensor-lff2c-5c458ff954-zfkcb 1/1 Running 0 7s

The EventSource and Sensor are running successfully.

Verification

It’s time to make the deployment violate the policy and see if Testkube runs the test. Update the application deployment using the following command to use only 120m of CPU resources. This should violate the Kyverno policy and trigger a smoke test to see if the application is still accessible.

$ kubectl edit deployment userapp

deployment.apps/userapp edited

This should trigger the curl test in Testkube for the application running on the cluster.

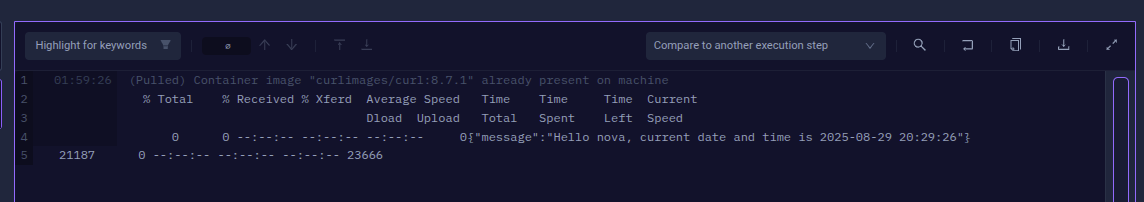

View the Test execution logs by selecting the job: “Run curl” in the Dashboard.

Check the TestWorkflow `smoke-nova` using Testkube CLI and verify that the test is triggered.

$ testkube get tw smoke-nova

...

Test Workflow:

Name: smoke-nova

Namespace: testkube

Created: 2025-08-29 19:29:58 +0000 UTC

Labels: argoevent=true

Test Workflow Execution:

Name: smoke-nova

Execution ID: 68b20da53c024a784d1264e8

Execution name: smoke-nova-2

Execution namespace:

Execution number: 2

Requested at: 2025-08-29 20:29:25.329 +0000 UTC

Disabled webhooks: false

Tags: PolicyViolationName=f492de17-68ec-42c9-883d-5a10dfddecde

Status: passed

Queued at: 2025-08-29 20:29:26 +0000 UTC

Started at: 2025-08-29 20:29:26 +0000 UTC

Finished at: 2025-08-29 20:29:29.965 +0000 UTC

Duration: 4.636s

The above output shows that TestWorkflow `smoke-nova` successfully ran the smoke test with a Status of passed. The `tags` field is updated with the name of Policy Report as `PolicyViolationName=f492de17-68ec-42c9-883d-5a10dfddecde`.

Check the Kyverno Policy Report in the default namespace.

$ kubectl get policyreports

NAME KIND NAME PASS FAIL WARN ERROR SKIP AGE

f492de17-68ec-42c9-883d-5a10dfddecde Deployment userapp 0 1 0 0 0 58m

The PolicyReport name matches the `PolicyViolationName` tag on the execution. Let us check the reason behind the violation using the PolicyReport.

$ kubectl get policyreport f492de17-68ec-42c9-883d-5a10dfddecde -o jsonpath='{.results[*].message}'

validation error: CPU request must be at least 200m and memory request must be specified for all containers in a Deployment. rule require-resources-in-deployment[0] failed at path /spec/template/spec/containers/0/resources/requests/cpu/

The PolicyReport states the exact reason for the violation, and with Testkube you can confirm that the violation has not affected the application's behavior.
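
If the report is fetched as JSON instead (for example with `-o json`), the same information can be pulled out programmatically. The sketch below is a hypothetical helper that lists the policy, rule, and message of every failed result; the sample report is abbreviated and uses the rule name and message from the policy in this article.

```python
def failed_results(report: dict) -> list:
    """Return (policy, rule, message) for every failed result in a PolicyReport."""
    return [
        (r.get("policy", ""), r.get("rule", ""), r.get("message", ""))
        for r in report.get("results") or []
        if r.get("result") == "fail"
    ]


# Abbreviated, hypothetical PolicyReport content for illustration.
sample_report = {
    "results": [
        {
            "policy": "require-resources",
            "rule": "require-resources-in-deployment",
            "result": "fail",
            "message": "CPU request must be at least 200m and memory request "
                       "must be specified for all containers in a Deployment.",
        },
        {"policy": "another-policy", "rule": "another-rule", "result": "pass"},
    ]
}

for policy, rule, message in failed_results(sample_report):
    print(f"{policy}/{rule}: {message}")
```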

For troubleshooting, you can check the Sensor logs to verify if the Testkube webhook was triggered, as shown below:

$ kubectl logs -n argo-events -l sensor-name=kyverno-violation-sensor -f

{"level":"info","ts":"2025-08-29T20:29:24.36496188Z","logger":"argo-events.sensor","caller":"http/http.go:193","msg":"Making a http request...","sensorName":"kyverno-violation-sensor","triggerName":"trigger-testkube","triggerType":"HTTP","url":"http://api.testkube.io/organizations/tkcorg_b8ddc820d4919590/environments/tkcenv_94cb6305570f69bd/agent/test-workflows/smoke-nova/executions"}

{"level":"info","ts":"2025-08-29T20:29:26.956851778Z","logger":"argo-events.sensor","caller":"sensors/listener.go:449","msg":"Successfully processed trigger 'trigger-testkube'","sensorName":"kyverno-violation-sensor","triggerName":"trigger-testkube","triggerType":"HTTP","triggeredBy":["kyverno-violation-dep"],"triggeredByEvents":["c00639debca94e9bb850279134dfc5ef"]}

The above logs show that an HTTP request was sent to the Testkube API successfully. You can also debug using the EventSource and Testkube API server logs to get an understanding of the complete workflow.

How to Get Started with Policy-as-Code in Kubernetes Testing

Start Simple

Begin with a small set of high-value policies that deliver immediate impact. Instead of covering everything at once, enforce basics such as image provenance (only signed images or trusted registries), resource requests (ensuring every Pod declares CPU and memory requests), or DNS/networking limits (blocking external egress where not needed). These help teams see quick wins without overwhelming complexity.

Make Policies Observable

Policies are only effective if their outcomes are visible. Ensure all violations are logged and aggregated into existing observability stacks. For example, export Kyverno PolicyReports or Gatekeeper audit logs to Prometheus and surface them in Grafana dashboards. This not only highlights non-compliance trends but also provides early signals of drift in CI/CD pipelines.
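
As one possible starting point, the sketch below turns PolicyReport summaries into Prometheus text exposition lines that an exporter could serve. It is an illustration under assumptions: the metric name `policy_report_results`, the label names, and the function name are hypothetical, and a real setup would more likely rely on an existing exporter than on hand-written code.

```python
STATUSES = ("pass", "fail", "warn", "error", "skip")


def policy_report_metrics(reports: list) -> str:
    """Render PolicyReport summaries as Prometheus text exposition format."""
    lines = [
        "# HELP policy_report_results Number of PolicyReport results by status.",
        "# TYPE policy_report_results gauge",
    ]
    for report in reports:
        meta = report.get("metadata", {})
        namespace = meta.get("namespace", "")
        name = meta.get("name", "")
        summary = report.get("summary", {})
        for status in STATUSES:
            value = summary.get(status, 0)
            lines.append(
                f'policy_report_results{{namespace="{namespace}",'
                f'report="{name}",status="{status}"}} {value}'
            )
    return "\n".join(lines) + "\n"


# Hypothetical report summary for illustration.
sample_reports = [
    {
        "metadata": {"namespace": "default", "name": "example-report"},
        "summary": {"pass": 0, "fail": 1, "warn": 0, "error": 0, "skip": 0},
    }
]

print(policy_report_metrics(sample_reports), end="")
```

Graphing the `fail` series over time is what surfaces recurring violations and drift.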

Test Your Policies

Policies should be treated like code: written, tested, and validated before rollout. Tools like Conftest or OPA unit tests let you validate logic locally. Run policies in audit mode first to see their impact without blocking deployments. For example, Kyverno lets you create a policy in Audit mode, which records violations without rejecting resources. The Kyverno CLI's `kyverno test` command can also be used to check policies against sample resources before enforcing them. Once ready, the same policies can be deployed in Enforce mode to block resource creation or updates that violate a policy.

Version Control & Peer Review

Store policies in Git alongside application manifests, Helm charts, or Kustomize overlays. This enables GitOps-driven workflows where policy changes go through the same peer review, CI checks, and approval gates as application code. By versioning policies, teams gain traceability and rollback safety when refining or extending their governance rules.

What’s Next for Policy-as-Code in Kubernetes Testing?

The future of Policy-as-Code is moving beyond static enforcement toward intelligent, adaptive, and automated governance in cloud-native systems.

- AI-assisted policy generation: LLMs can reduce the manual overhead of writing Rego or Kyverno policies by suggesting templates or generating policies from natural language requirements. For example, compliance documents like CIS benchmarks could be directly translated into Rego snippets.

- Supply chain security & SBOM enforcement: Policies will be used to enforce provenance, validate SBOMs, and block unsigned or vulnerable artifacts at admission time. This aligns with frameworks like SLSA and NIST 800-218.

- Cross-cluster drift detection: Policies will not only validate single clusters but also compare multiple environments (dev, staging, prod) for drift in security or configuration baselines, improving auditability and compliance across hybrid or multi-cloud setups.

Conclusion

Policy-as-Code plays an important role in Kubernetes because it brings consistency and repeatability to how clusters are managed. In a distributed environment, with multiple namespaces and teams pushing workloads, relying on manual checks or ad-hoc reviews is not reliable. Expressing policies as code allows us to enforce resource limits, security requirements, and compliance rules in a Kubernetes-native way. These rules are versioned, auditable, and can be applied consistently across clusters.

In the demo, we looked at a real-world scenario where a Deployment must request a minimum CPU value. If the request falls below that threshold, the policy fails and, because the policy runs in Audit mode, Kyverno records the violation in a PolicyReport instead of blocking the change. To go a step further, we used Testkube to run tests and validate that reducing CPU did not break the application or cause unexpected behavior. This combination of policy enforcement and automated testing gives us a stronger way to manage resources without compromising reliability.

Get started with Testkube to integrate policy-driven testing into Kubernetes. Automate compliance checks, validate workloads against resource policies, and ensure application reliability with minimal overhead. Join the Testkube Slack community to start a conversation or read Testkube documentation to start building fault-tolerant, automated test pipelines tailored to the organisation’s infrastructure.

About Testkube

Testkube is a cloud-native continuous testing platform for Kubernetes. It runs tests directly in your clusters, works with any CI/CD system, and supports every testing tool your team uses. By removing CI/CD bottlenecks, Testkube helps teams ship faster with confidence.

Explore the sandbox to see Testkube in action.