Try Testkube instantly in our sandbox. No setup needed.

Executive Summary

In dynamic Kubernetes environments, even minor changes to configuration or infrastructure resources can introduce unexpected behavior in applications. Identifying the effect of such changes manually is time-consuming and prone to error, especially at scale. Without automated detection and testing, these changes may go unnoticed until they cause issues in production.

Tools like Argo Events capture Kubernetes resource lifecycle events, such as ADD, UPDATE, or DELETE, and can detect and respond to a wide range of other cluster events. These events can then be fed into cloud-native test orchestration platforms like Testkube, allowing teams to automatically run tests that validate the impact of changes, ensuring faster feedback and safer deployments.

In this blog, we'll demonstrate setting up this event-driven testing workflow using real-world examples. You'll see how configuration changes to Kubernetes resources, such as ConfigMaps or Secrets, automatically trigger the relevant tests, making your deployment process more reliable and efficient.

Why Argo Events + Testkube Integration is Powerful

Event-Driven Infrastructure Testing

This integration creates a reactive testing ecosystem in which Kubernetes resource modifications automatically trigger validation workflows. Argo Events uses EventSources to watch for specific Kubernetes API server events (ConfigMap updates, Secret rotations, Deployment changes). Argo Events Sensors then process these events and call the Testkube REST API to trigger a Test Workflow that executes the test.

Real-Time Feedback Loop with Advanced Orchestration

Unlike traditional CI/CD pipelines that test code changes, this approach validates infrastructure state changes as they occur in the cluster, ensuring that your changes are tested no matter how they are performed. Testkube's cloud-native architecture allows for distributed test execution across multiple environments simultaneously. The platform can run parallel test workflows—functional tests, performance benchmarks, security scans, and compliance checks—providing comprehensive validation within minutes of a configuration change.

GitOps-Native Workflow Integration

The integration seamlessly fits into GitOps methodologies by monitoring the actual applied state rather than just Git commits or Pull Requests. When ArgoCD or Flux applies manifests to the cluster, Argo Events detects the resulting resource changes and triggers Testkube workflows. This creates a complete feedback loop: Git commit → GitOps operator applies changes → Argo Events detects cluster state changes → Testkube validates the impact → Results feed back to development teams through Testkube Dashboard, Slack notifications, or any observability solution.

Testkube Integration with Argo Events

In this demo, we will monitor a Kubernetes ConfigMap for changes using Argo Events. When the ConfigMap is updated, a Sensor triggers a webhook to Testkube, which runs a predefined Test Workflow. This automated flow helps validate the impact of configuration changes instantly, ensuring application stability.

Prerequisites

- A Kubernetes cluster - we’re using a local Minikube cluster

- Helm installed

- kubectl installed

- Argo Events installed

- A Testkube account

- A Testkube Environment with the Testkube Agent configured on the cluster

- A Testkube API token generated using the Testkube Dashboard

Clone the testkube-example GitHub repository and change directory to `ArgoEvents` to get the demo-related configurations.

Steps to Deploy Argo Events with Testkube

For Argo Events to trigger test execution, we will first set up Argo Events on a cluster and configure it. Several Kubernetes resources must be configured as part of the integration:

- EventBus: Provides messaging infrastructure for Argo Events.

- ServiceAccount and RBAC: Enables necessary permissions for event monitoring and webhook execution.

- EventSource: Determines which events to monitor.

- Sensor: Processes events and generates webhooks for Testkube.
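Of these resources, the EventBus is the smallest. As a point of reference, here is a sketch of what the default native NATS EventBus from the Argo Events examples looks like. It is written from memory, and the `argo-events` namespace is an assumption, so compare it against the upstream example before relying on it.

```yaml
# Sketch of a default EventBus backed by native NATS.
apiVersion: argoproj.io/v1alpha1
kind: EventBus
metadata:
  name: default            # EventSources and Sensors use the EventBus named "default" unless configured otherwise
  namespace: argo-events   # assumed namespace for all Argo Events resources in this demo
spec:
  nats:
    native:
      replicas: 3          # number of NATS pods backing the bus
      auth: token          # token-based authentication between clients and the bus
```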

Step 1: Setting up Argo Events

- Create a dedicated namespace for Argo Events.

- Install Argo Events components using the latest version.

- Install with a Validating Admission Controller. This step improves the reliability and error-checking of custom resources like EventBus, Sensor, and EventSource.

- Deploy an `EventBus` using the default configuration provided in the `argo-events` examples.

- So that Argo Events can watch cluster resources and Sensors can fire their triggers, create a ServiceAccount with the required RBAC settings.
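A minimal sketch of such a ServiceAccount and its RBAC follows. The names `argo-events-sa`, `configmap-watcher`, and `configmap-watcher-binding` are hypothetical, and the sketch assumes Argo Events runs in the `argo-events` namespace while the watched ConfigMap lives in `default`. It grants only read access to ConfigMaps; widen the rules if you plan to watch other resource types.

```yaml
# ServiceAccount used by the Argo Events pods (name is an assumption)
apiVersion: v1
kind: ServiceAccount
metadata:
  name: argo-events-sa
  namespace: argo-events
---
# Read-only access to ConfigMaps in the namespace being watched
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: configmap-watcher
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "list", "watch"]
---
# Bind the Role to the ServiceAccount, which lives in another namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: configmap-watcher-binding
  namespace: default
subjects:
  - kind: ServiceAccount
    name: argo-events-sa
    namespace: argo-events
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: configmap-watcher
```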

Save the configuration in a file named `argo-events-sa.yaml` and apply it to the cluster.

With this, the cluster is ready with Argo Events installed. We have configured a ServiceAccount and RBAC that will allow Argo Events to monitor a resource.

Step 2: Create a resource to monitor

We will now create the ConfigMap that Argo Events will monitor for changes and deploy it on the cluster.
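A minimal sketch of such a ConfigMap is shown here. The name `demo-config`, the `default` namespace, and the `watch: "true"` label match the rest of this walkthrough; the `data` entries are hypothetical placeholders.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: demo-config
  namespace: default
  labels:
    watch: "true"        # label used later to filter events for this ConfigMap
data:
  # Hypothetical application settings; replace with your own keys
  APP_MODE: "staging"
  LOG_LEVEL: "info"
```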

Save it in a file named `sample-configmap.yaml` and apply it to the cluster.

In this ConfigMap, we have set the label `watch: "true"`, which will be used to filter events for this ConfigMap.

Step 3: Create a Test Workflow in the Testkube Dashboard

In this step, verify that the Testkube Dashboard has a Test Workflow configured that will be triggered for execution when the resource update event happens.

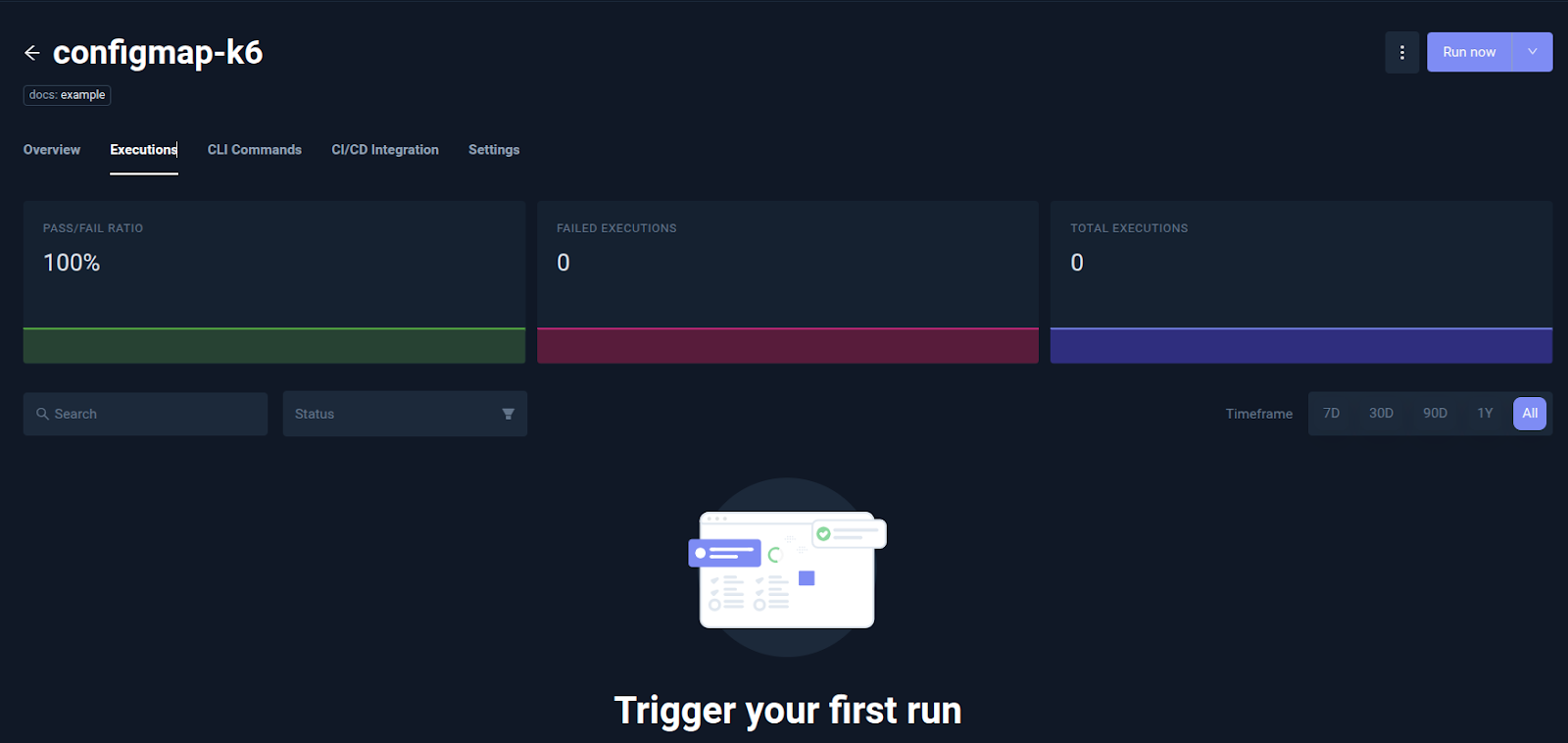

Here we have created a Test Workflow using the examples provided by Testkube. In this Test Workflow, `configmap-k6`, a k6 test executes and stores its artifacts. You can run tests with any testing tool supported by Testkube, or bring your own test (BYOT).
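For orientation, a Test Workflow along these lines might look like the sketch below. This is not the exact workflow used in the demo: the inline k6 script, the target URL, the image tag, the `testkube` namespace, and the artifact paths are all assumptions, so check the field names against the Test Workflow reference for your Testkube version.

```yaml
apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: configmap-k6
  namespace: testkube        # assumed: the namespace where the Testkube Agent runs
spec:
  content:
    files:
      # Hypothetical inline k6 script; a Git source could be used instead
      - path: /data/test.js
        content: |
          import http from 'k6/http';
          import { check } from 'k6';

          export default function () {
            // Placeholder target; point this at the service that consumes the ConfigMap
            const res = http.get('https://example.com/');
            check(res, { 'status is 200': (r) => r.status === 200 });
          }
  steps:
    - name: Run k6 test
      container:
        image: grafana/k6:latest   # pin a specific version in real use
      shell: mkdir -p /data/artifacts && k6 run --summary-export=/data/artifacts/summary.json /data/test.js
    - name: Save artifacts
      workingDir: /data/artifacts
      artifacts:
        paths:
          - '*'
```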

Step 4: Configure Argo Events with Testkube

We need an `EventSource` that watches a resource, such as a ConfigMap, for ADD, UPDATE, and DELETE events. We also need a Sensor that triggers the Test Workflow execution in Testkube when one of those events occurs.

1. Create an `EventSource` with the following configurations:
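A sketch of an EventSource that matches the description in this step is shown here. The EventSource name `configmap-eventsource` and the event name `demo-configmap` come from this walkthrough; the `argo-events` namespace and the `argo-events-sa` ServiceAccount name are assumptions.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: EventSource
metadata:
  name: configmap-eventsource
  namespace: argo-events               # assumed Argo Events namespace
spec:
  template:
    serviceAccountName: argo-events-sa # assumed ServiceAccount with read access to ConfigMaps
  resource:
    demo-configmap:                    # event name referenced by the Sensor
      namespace: default               # namespace of the watched ConfigMap
      group: ""                        # core API group
      version: v1
      resource: configmaps
      eventTypes:
        - ADD
        - UPDATE
        - DELETE
      filter:
        labels:
          - key: watch
            operation: "=="
            value: "true"              # only ConfigMaps labelled watch=true
```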

Save it in a file named `configmap-eventsource.yaml` and apply it to the cluster.

In this EventSource, we have set a filter that matches ADD, UPDATE, and DELETE events for ConfigMaps in the `default` namespace that carry the label `watch: "true"`.

2. Create a Sensor with the following configurations:
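A sketch of such a Sensor follows. The Sensor, EventSource, and event names and the `ArgoEventConfigMapUpdate` tag come from this walkthrough. Everything else is an assumption: the dependency and trigger names, the Secret name `testkube-api-token` with key `token`, and in particular the URL shape, where `<org-id>` and `<env-id>` are placeholders. Take the exact execution endpoint from the Testkube API documentation for your environment.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Sensor
metadata:
  name: configmap-webhook-sensor
  namespace: argo-events                 # assumed Argo Events namespace
spec:
  dependencies:
    - name: configmap-dep                # hypothetical dependency name
      eventSourceName: configmap-eventsource
      eventName: demo-configmap
      filters:
        data:
          # Only pass events whose ConfigMap carries the label watch=true
          - path: body.metadata.labels.watch
            type: string
            value:
              - "true"
  triggers:
    - template:
        name: testkube-webhook           # hypothetical trigger name
        http:
          # Assumed URL shape; the Test Workflow name is part of the path
          url: https://api.testkube.io/organizations/<org-id>/environments/<env-id>/agent/test-workflows/configmap-k6/executions
          method: POST
          headers:
            Content-Type: application/json
          secureHeaders:
            # The header value is read verbatim from the Secret
            - name: Authorization
              valueFrom:
                secretKeyRef:
                  name: testkube-api-token
                  key: token
          payload:
            # Copy the ConfigMap name from the event into the request body as a tag
            - src:
                dependencyName: configmap-dep
                dataKey: body.metadata.name
              dest: tags.ArgoEventConfigMapUpdate
```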

This will create a Sensor `configmap-webhook-sensor` which:

- Watches for: Events from the EventSource named `configmap-eventsource` where the event is named `demo-configmap`. This is the event defined in the EventSource for changes to the ConfigMap.

- Filters: Only events where the ConfigMap has the label `watch=true`.

- Triggers: A webhook to Testkube when the above condition is met.

- `http.url`: The Testkube webhook that queues the execution of the Test Workflow. The name of the Test Workflow to execute is passed in this URL.

- `http.payload`: Sends the ConfigMap's name as a tag field: `"ArgoEventConfigMapUpdate": "<configmap-name>"`.

- `http.secureHeaders`: Passes the Testkube API token needed for authentication, read from a Kubernetes Secret.
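One way to provide that token is a Secret manifest like the sketch below. The Secret name `testkube-api-token` and the key `token` are hypothetical and must match whatever the Sensor's `secureHeaders` entry references. Because the header value is taken verbatim from the Secret, the sketch assumes the `Bearer ` prefix is stored as part of the value.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: testkube-api-token   # hypothetical name; must match the Sensor's secretKeyRef
  namespace: argo-events     # same namespace as the Sensor
type: Opaque
stringData:
  # Replace the placeholder with the API token generated in the Testkube Dashboard
  token: "Bearer <your-testkube-api-token>"
```

Avoid committing the filled-in manifest to Git; create it from a secure source or a secrets manager instead.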

Save the Sensor configuration in a file and apply it to the cluster.

With the Sensor configured and connected to EventSource and Testkube webhook, the setup is now ready to respond automatically to ConfigMap changes in our cluster.

Step 5: Verification

Verify that the EventSource and Sensor are running, for example by listing the pods with `kubectl get pods -n argo-events` (assuming `argo-events` is the namespace you created):

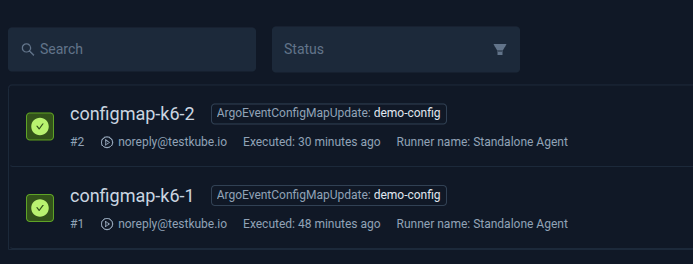

Update the ConfigMap and check the Testkube Dashboard for the execution of the Test Workflow.

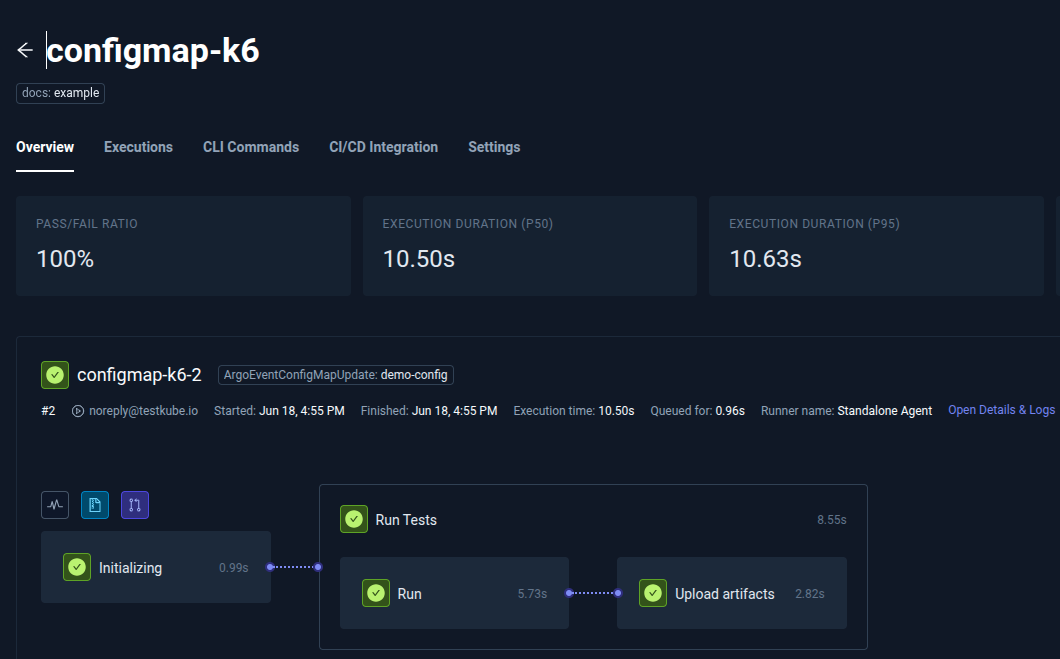

The execution of the Test Workflow is triggered and completed successfully.

We added a tag in the Sensor configuration so that we can verify the request came from Argo Events. Check the execution to see whether the tag has been added to it.

The tag `ArgoEventConfigMapUpdate` is added to the execution along with the name of the ConfigMap, `demo-config`. Other values related to the resource that raised the event, such as the event type or the event timestamp, can be added as tags in the same way.

For troubleshooting, you can check the Sensor pod logs to verify whether the Testkube webhook was triggered. In our run, the logs showed that the Testkube webhook for the Test Workflow `configmap-k6` was triggered and processed successfully.

Monitoring the Test Workflow using Testkube Dashboard

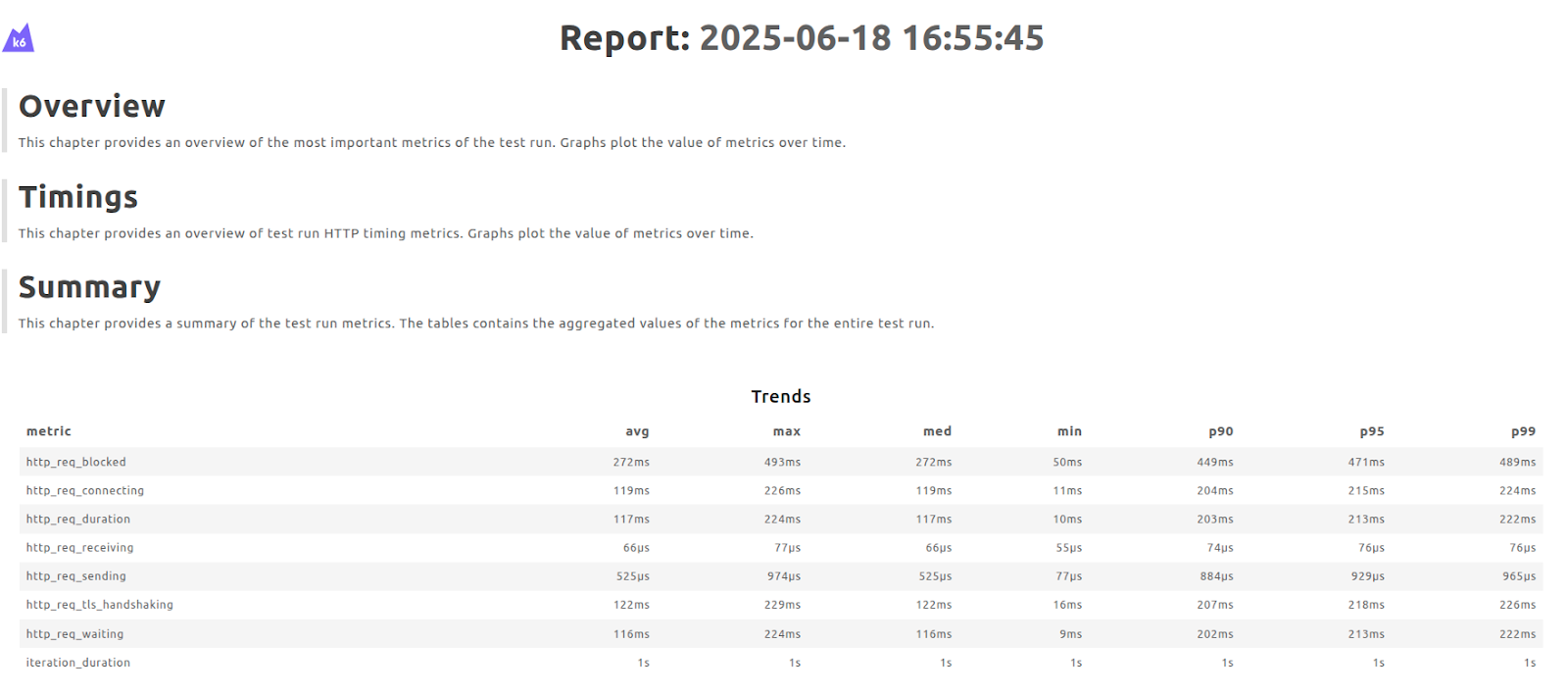

Testkube provides detailed execution logs that allow you to track the webhook execution status and generate reports. Let's look at how to monitor the status of the Test Workflow using the Dashboard:

Select the Test Workflows tab, open `configmap-k6`, and view the Log Output:

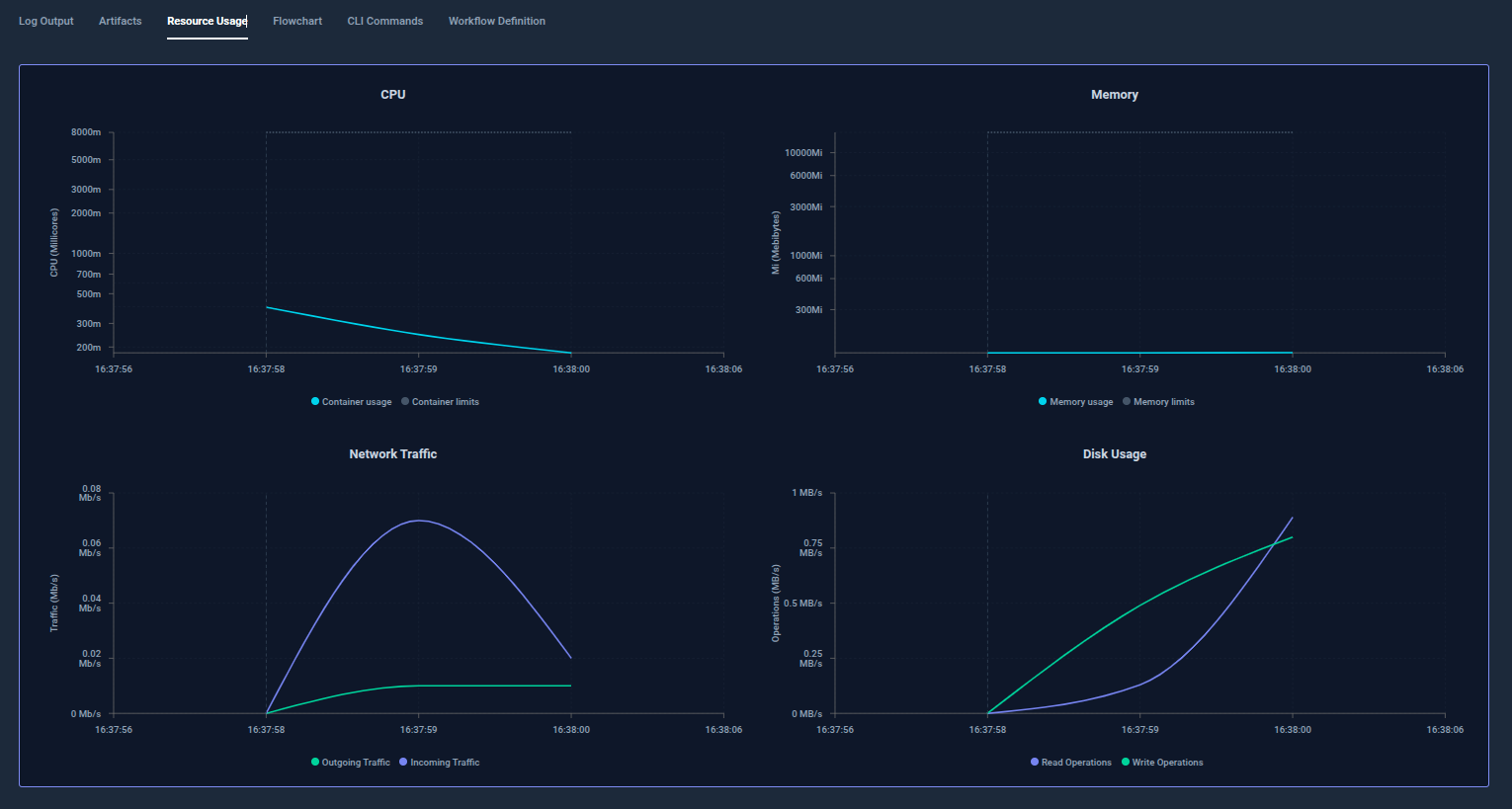

Testkube Dashboard gathers all the information related to the Test Workflow execution. It also provides you with the capability to process artifacts, track the resource usage for each test execution, compare the duration of execution of each test, and much more.

Integrating Argo Events with Testkube offers a powerful way to make your Kubernetes environment reactive, allowing you to trigger automated tests whenever critical resources like ConfigMaps are updated.

With Testkube, you can monitor detailed test execution metrics, including CPU, memory, network traffic and disk usage across each test run. This visibility helps optimize test performance, troubleshoot bottlenecks, and right-size your testing infrastructure in Kubernetes environments.

In this setup, Argo Events listens for changes (add/update/delete) on specific Kubernetes resources using precise label filters. When a matching event occurs, a webhook is triggered to Testkube, which then runs tests to validate whether the change introduces any regressions or issues.

Get started with Testkube now to implement this approach that provides a GitOps-friendly, event-driven testing pipeline. It bridges the gap between infrastructure changes and application reliability, making it easier to catch issues early, automate responses to changes, and ensure confidence in every deployment. By adopting this approach, teams can shift left on reliability, catch regressions earlier, and scale testing without scaling complexity.

Join the Testkube Slack community to start a conversation, or read the Testkube documentation to start building fault-tolerant, automated test pipelines tailored to your organisation's infrastructure.

About Testkube

Testkube is a cloud-native continuous testing platform for Kubernetes. It runs tests directly in your clusters, works with any CI/CD system, and supports every testing tool your team uses. By removing CI/CD bottlenecks, Testkube helps teams ship faster with confidence.

Explore the sandbox to see Testkube in action.
