Cross-System Root Cause Analysis

Give AI agents access to your full ecosystem (source code, infrastructure, and observability tools) for deeper, more accurate failure analysis.

The problem

Test failures don't happen in isolation. A failing API test might trace back to a recent code change. A flaky end-to-end test might stem from infrastructure instability. A sudden spike in failures could correlate with a deployment or configuration update.

The trouble is that most troubleshooting happens in silos. Engineers check test logs in one tool, pull up recent commits in another, and review infrastructure state in a third. Piecing together the full picture means jumping between systems, manually correlating timestamps, and holding all of that context in your head.

This fragmented workflow slows down root cause analysis and increases the chance of missing the real issue. When the data needed to explain a failure lives across GitHub, Datadog, and your test platform, no single tool can connect the dots on its own.

The solution

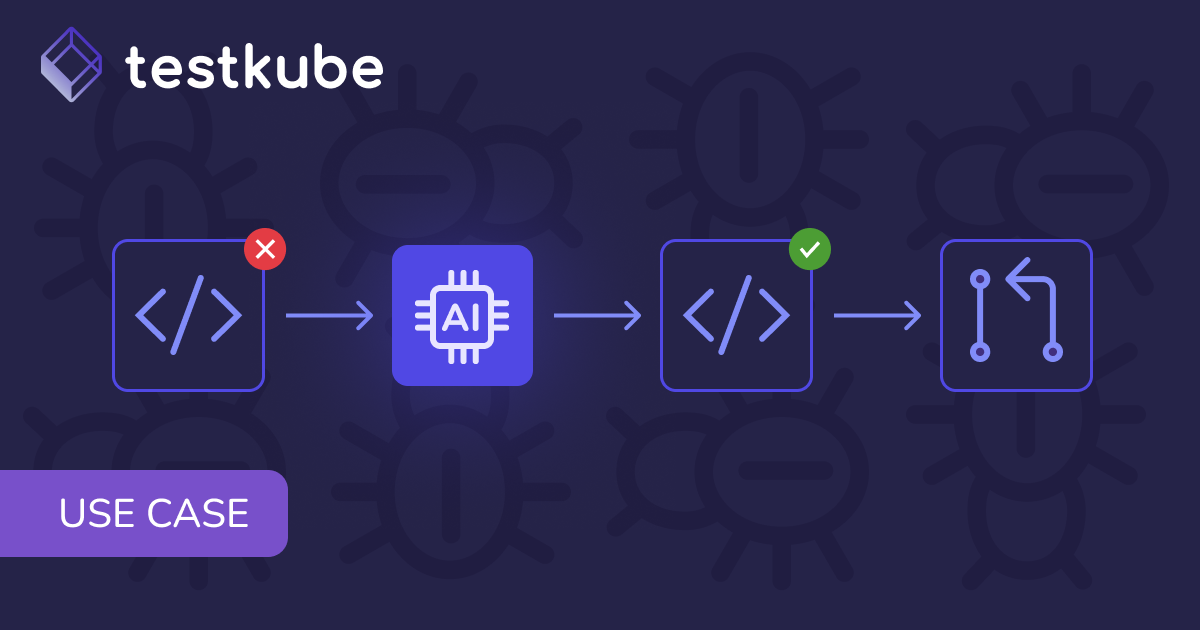

Testkube's AI Agent Framework lets agents pull context from external systems via MCP (Model Context Protocol) servers. This means your troubleshooting agents can access source code changes, infrastructure state, observability data, and more while they analyze test failures.

Instead of just examining logs, agents can correlate failures with recent commits, check Kubernetes cluster health, or pull metrics from your monitoring stack. The result is faster, more accurate analysis grounded in the full context of what's happening across your environment.
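MCP (Model Context Protocol) servers communicate over JSON-RPC 2.0. An agent invokes a server-side tool by sending a `tools/call` request. The sketch below shows roughly what that request looks like on the wire and how the text content of the result can be read back. It is illustrative only, because in practice the agent framework handles this exchange for you. The tool name `list_commits` and its `owner`/`repo` arguments are hypothetical stand-ins for whatever tools your connected server actually exposes.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids for this client session.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def extract_text(response_json: str) -> list[str]:
    """Collect the text items from a `tools/call` result; raise on errors."""
    response = json.loads(response_json)
    if "error" in response:
        # Protocol-level failure (unknown tool, invalid params, ...).
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    if result.get("isError"):
        # Tool-level failure reported inside a successful JSON-RPC response.
        raise RuntimeError("tool reported an error")
    return [item["text"] for item in result.get("content", [])
            if item.get("type") == "text"]


if __name__ == "__main__":
    # Hypothetical tool and arguments for a source-control MCP server.
    print(build_tool_call("list_commits", {"owner": "acme", "repo": "checkout-service"}))
```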

Key capabilities

- Connect agents to GitHub, GitLab, or other source control systems via MCP servers

- Pull infrastructure context from Kubernetes clusters

- Access observability data from tools like Datadog or Prometheus

- Correlate test failures with code changes, deployments, and environment state

- Notify teams via Slack or create issues in Jira based on findings
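An agent could correlate failures with deployments by comparing the failure rate just before and just after each rollout. The minimal sketch below assumes that execution results and deployment timestamps have already been fetched, for example from Testkube and a Kubernetes MCP server. The `Execution` and `Deployment` types, the one-hour window, and the 0.3 threshold are hypothetical choices, not Testkube defaults.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Execution:
    finished_at: datetime
    passed: bool


@dataclass
class Deployment:
    name: str
    deployed_at: datetime


def failure_rate(executions: list[Execution]) -> float:
    """Fraction of executions that failed; 0.0 when there are none."""
    if not executions:
        return 0.0
    return sum(not e.passed for e in executions) / len(executions)


def suspicious_deployments(
    executions: list[Execution],
    deployments: list[Deployment],
    window: timedelta = timedelta(hours=1),
    min_jump: float = 0.3,
) -> list[tuple[str, float]]:
    """Deployments whose post-rollout failure rate rose by at least `min_jump`.

    Compares executions in [deployed_at - window, deployed_at) against
    executions in [deployed_at, deployed_at + window).
    """
    flagged = []
    for d in deployments:
        before = [e for e in executions
                  if d.deployed_at - window <= e.finished_at < d.deployed_at]
        after = [e for e in executions
                 if d.deployed_at <= e.finished_at < d.deployed_at + window]
        jump = failure_rate(after) - failure_rate(before)
        if jump >= min_jump:
            flagged.append((d.name, round(jump, 2)))
    return flagged
```

A fixed window keeps the comparison simple and explainable. A real agent would likely also check whether the failing tests exercise the deployed service before blaming the rollout.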

How it works

- Connect external MCP servers to Testkube (GitHub, Kubernetes, observability tools, etc.)

- Configure your agent with access to the tools it needs and a prompt tailored to your workflow

- Run the agent against a failed execution and let it pull context from connected systems

- Review enriched analysis that correlates test failures with code changes, infrastructure events, and historical patterns

- Take action directly, or configure the agent to create Jira tickets or post to Slack automatically
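The "historical patterns" step above can start from a classic flakiness heuristic. If the same test both passed and failed on the same commit, the code did not change, so the outcome is likely nondeterministic. The sketch below is minimal and assumes execution history is available as hypothetical `(test_name, commit_sha, passed)` tuples.

```python
from collections import defaultdict


def flaky_tests(results: list[tuple[str, str, bool]]) -> set[str]:
    """Return tests that both passed and failed on the same commit.

    Each result is a hypothetical (test_name, commit_sha, passed) tuple.
    Identical code producing different outcomes points at nondeterminism
    rather than at a regression introduced by a code change.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test_name, commit_sha, passed in results:
        outcomes[(test_name, commit_sha)].add(passed)
    # Both True and False observed for one (test, commit) pair => flaky.
    return {test for (test, _sha), seen in outcomes.items() if len(seen) == 2}
```

A test that fails on one commit and passes on a later one is not flagged. That pattern is more consistent with a fix than with flakiness.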

For example: a flakiness analysis agent can examine failed test logs, then check your GitHub repository for recent changes to the test code itself. If it finds a commit that modified the failing test, it surfaces that correlation and explains how the change might be causing instability.
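The correlation in this example boils down to a filter: which recent commits changed the file containing the failing test? The sketch below is minimal and assumes commit metadata (SHA, timestamp, changed paths) has already been fetched from the repository. The `Commit` type, the seven-day lookback, and the file paths are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Commit:
    sha: str
    message: str
    committed_at: datetime
    changed_files: list[str] = field(default_factory=list)


def commits_touching_test(
    test_path: str,
    failed_at: datetime,
    commits: list[Commit],
    lookback: timedelta = timedelta(days=7),
) -> list[Commit]:
    """Commits within `lookback` before the failure that modified `test_path`."""
    since = failed_at - lookback
    hits = [
        c for c in commits
        if since <= c.committed_at <= failed_at and test_path in c.changed_files
    ]
    # Newest first: the most recent change to the test is the likeliest suspect.
    return sorted(hits, key=lambda c: c.committed_at, reverse=True)
```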

Outcomes

- Find the root cause faster by automatically correlating failures with code and infrastructure changes

- Eliminate tool-hopping by letting agents pull context from across your stack

- Detect flakiness earlier by cross-referencing test changes with failure patterns

- Keep teams informed with automated notifications to Slack or ticket creation in Jira
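The standalone sketch below makes the notification outcome concrete. It shows what an automated Slack message might contain when posted through a Slack incoming webhook, which accepts a JSON body with a `text` field. This is not Testkube's integration. The `Finding` fields are hypothetical, and the webhook URL is a placeholder you would obtain from your own Slack app.

```python
import json
import urllib.request
from dataclasses import dataclass


@dataclass
class Finding:
    test_name: str
    execution_id: str
    summary: str
    suspected_cause: str


def build_slack_payload(finding: Finding) -> dict:
    """Format an agent finding as a Slack incoming-webhook message body."""
    text = (
        f":rotating_light: *{finding.test_name}* failed "
        f"(execution `{finding.execution_id}`)\n"
        f"*Summary:* {finding.summary}\n"
        f"*Suspected cause:* {finding.suspected_cause}"
    )
    return {"text": text}


def post_to_slack(webhook_url: str, payload: dict) -> int:
    """POST the payload to a Slack incoming webhook and return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Payload construction is kept separate from the HTTP call. This lets the message formatting be checked without network access.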

What makes this different

Standalone AI tools don't have access to your internal systems. Pasting logs into ChatGPT strips away the execution context, and the model can't correlate them with live data from GitHub or Kubernetes. Testkube agents connect directly to your ecosystem via MCP, grounding their analysis in real, current data from across your environment. The more context you give them, the better their analysis gets.
