Advanced Troubleshooting & Failure Analysis

Stop digging through logs manually. Let AI agents aggregate signals, identify patterns, and surface root causes in seconds.

The problem

When a test fails, finding out why is rarely straightforward. Engineers open the execution logs, scroll through hundreds of lines, cross-reference previous runs, and try to piece together what went wrong. For complex failures, this means pulling in logs from the system under test, checking environment configurations, and comparing against historical patterns.

This process eats up time. A single failure investigation can take 10 to 30 minutes depending on complexity. Multiply that across dozens of daily failures and the drain on engineering productivity becomes significant. Context switching alone disrupts focus and slows down the work that actually moves releases forward.

The bigger problem is that CI/CD pipelines can execute tests, but they can't reason about results. They surface pass/fail status without the context needed to act. Teams compensate by building scripts and manual runbooks, but these approaches don't scale as test suites grow and environments multiply.

The solution

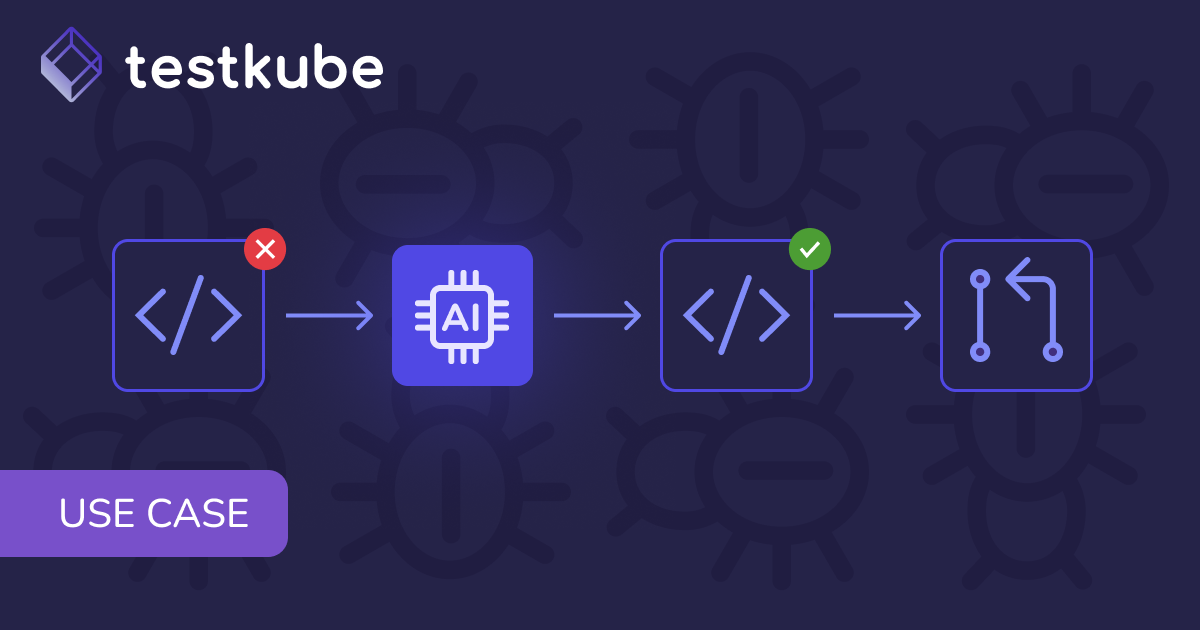

Testkube's AI Agent Framework enables agents that analyze test failures automatically. Instead of manually sifting through data, you can task an agent with examining logs, artifacts, execution history, and environment context. The agent then delivers a clear summary of what went wrong and why.

These agents run directly on the Testkube platform, with native access to your test workflows, execution metadata, and historical results. They reason over the data, identify patterns across runs, and surface insights that would take humans much longer to find.

Key capabilities

- Analyze logs and artifacts from failed executions automatically

- Compare failures against previous runs to detect patterns and flakiness

- Aggregate signals across environments, clusters, and configurations

- Deliver plain-language summaries with actionable findings

- Support follow-up questions for deeper investigation
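To make "compare failures against previous runs to detect patterns and flakiness" more concrete, here is a minimal Python sketch of one common heuristic. It assumes a hypothetical `Execution` record (test name, commit, pass/fail, error message). A test that both passes and fails on the same commit is flagged as flaky, and failure messages are normalized into signatures so recurring errors group together. This is not Testkube's implementation or API; the record shape and the `signature` and `analyze` helpers are illustrative assumptions.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Execution:
    # Hypothetical record shape for illustration only; not the Testkube API.
    test: str
    commit: str
    passed: bool
    error: str = ""


def signature(error: str) -> str:
    """Normalize an error message so recurring failures group together."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<hex>", error)  # mask addresses/ids
    sig = re.sub(r"\d+", "<n>", sig)                  # mask counts, ports, timings
    return sig.strip()


def analyze(executions):
    """Return (sorted flaky test names, failure counts per (test, signature))."""
    outcomes = defaultdict(set)   # (test, commit) -> set of observed pass/fail results
    patterns = defaultdict(int)   # (test, error signature) -> number of failures
    for ex in executions:
        outcomes[(ex.test, ex.commit)].add(ex.passed)
        if not ex.passed:
            patterns[(ex.test, signature(ex.error))] += 1
    # Both True and False seen for the same test on the same commit => flaky.
    flaky = sorted({test for (test, _), seen in outcomes.items() if len(seen) == 2})
    return flaky, dict(patterns)


# Usage with made-up sample data.
runs = [
    Execution("login", "abc123", False, "timeout after 3000 ms"),
    Execution("login", "abc123", True),
    Execution("checkout", "abc123", False, "HTTP 500 from /api/cart"),
    Execution("checkout", "def456", False, "HTTP 500 from /api/cart"),
]
flaky, patterns = analyze(runs)
print(flaky)     # ['login']
print(patterns)
```

Here `login` is flagged as flaky because it passed and failed on the same commit, while `checkout` fails consistently with one recurring signature, which points to a real regression rather than flakiness. An agent reasoning over full execution history can apply richer versions of this kind of correlation.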

How it works

- Navigate to a failed execution in Testkube and click "AI Analyze"

- Select your troubleshooting agent (configured with your preferred prompt and tool access)

- The agent investigates by pulling logs, artifacts, and execution history

- Review the analysis with a summary of findings, error patterns, and likely causes

- Ask follow-up questions to dig deeper or request additional analysis

The agent works interactively. If initial findings are inconclusive, you can prompt it to examine more executions, look at specific time ranges, or correlate failures with external factors. It's like having a senior engineer on call who already has full context on your test infrastructure.

Outcomes

- Cut investigation time from as much as 30 minutes to under 5 minutes for most failures

- Reduce context switching by getting answers without leaving Testkube

- Catch flaky tests faster by correlating failures across runs automatically

- Free up engineering time for higher-value work instead of log spelunking

What makes this different

Generic AI tools require you to copy-paste logs and explain your setup. IDE-based assistants don't have access to execution history or cluster-scale data. Testkube agents are grounded in your actual test infrastructure, with native access to workflows, results, and artifacts. They reason over real data, not summaries you feed them.