Try Testkube instantly in our sandbox. No setup needed.

Executive Summary

A developer on your team used Cursor to build an event handler for your message queue. Unit tests confirmed the message processing logic works. Integration tests verified it talks to Kafka correctly. You deploy to Kubernetes, and within hours, your queue backs up - transactions start failing because the handler can't keep pace with production traffic.

Why did this happen? Unit tests validated the processing logic worked in isolation. Integration tests confirmed it could communicate with Kafka. But neither of them tested how the system actually functions when real traffic hits: whether the handler can process messages fast enough given your pod resource limits, network latency between Kubernetes and Kafka, or the actual message volume your production system generates.
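The capacity question above is ordinary arithmetic that neither test type performs. As a minimal sketch, assuming each pod processes one message at a time and using made-up numbers (the function names are hypothetical, not from any library):

```python
def max_throughput(replicas: int, seconds_per_message: float) -> float:
    """Messages per second the handler can drain, assuming one message at a time per pod."""
    return replicas / seconds_per_message


def backlog_after(arrival_rate: float, replicas: int,
                  seconds_per_message: float, duration_s: float) -> float:
    """Queue depth after duration_s seconds, starting from an empty queue."""
    surplus = arrival_rate - max_throughput(replicas, seconds_per_message)
    return max(0.0, surplus * duration_s)


# Illustrative numbers only: 3 pods, 20 ms per message once real network
# latency and CPU limits are included, 200 messages/s arriving in production.
print("capacity (msg/s):", max_throughput(3, 0.02))
print("backlog after 1 hour:", backlog_after(200, 3, 0.02, 3600))
```

The per-message time is the number a local test cannot supply: it only becomes real once the handler runs under your pod limits and talks to your actual Kafka brokers.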

AI optimized the code for local correctness. It had no visibility into your distributed system or its specific constraints.

This is the fundamental gap with AI-generated code: it passes the tests we've always relied on, while introducing failures at a level we've rarely had to test systematically.

The AI Code Problem: When Copilot Can't See Your Infrastructure

AI code generation tools have a fundamental limitation: they optimize for code that is correct in isolation, without understanding the platform that code will run on, the services it will interact with, or the issues the application may face in production.

This creates a specific set of failure modes that traditional testing wasn't designed to catch.

AI Generates Code Without System Context

When you ask Cursor to generate an event handler, it produces clean Go code that follows best practices, with proper error handling, clear structure, and helpful comments. What it doesn't understand is your system's context: the resource quotas of your clusters, mandatory labels, network policies, or organization-specific security contexts.

The logic is correct. The code compiles. Unit tests pass. But deploy it to your cluster, and it violates resource limits, fails RBAC checks, or isn’t able to communicate with other services because the AI assumed standard Kubernetes networking, not your specific CNI implementation.

Without the context, the generated code is technically correct but operationally incompatible.

Hallucinated Infrastructure Assumptions

One of the most common sources of failure in AI-generated code is the assumptions it makes. Cursor assumes your S3 bucket exists and generates perfectly valid code using the AWS SDK. The unit tests pass because they use a mock in place of the S3 bucket. Deploy to production, and the application crashes: the bucket never existed.

The same kind of assumption causes failures from version mismatches or incompatible extensions deployed in production. Unfortunately, unit tests can't catch these. They can't validate infrastructure that doesn't exist, or that only the AI assumes exists.
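One way to surface these assumptions is a preflight step that probes each assumed resource before deployment. The sketch below is illustrative only: `find_missing` and the resource names are hypothetical, and each probe is a placeholder for a real existence check against your cloud provider or cluster.

```python
from typing import Callable, Dict, List


def find_missing(assumed: List[str],
                 probes: Dict[str, Callable[[], bool]]) -> List[str]:
    """Return every assumed resource whose probe is absent, fails, or raises."""
    missing = []
    for name in assumed:
        probe = probes.get(name)
        try:
            ok = probe is not None and probe()
        except Exception:
            ok = False  # an unreachable resource counts as missing
        if not ok:
            missing.append(name)
    return missing


# Hypothetical resources the generated code takes for granted.
assumed = ["s3://reports-bucket", "db-extension:pgcrypto"]
probes = {
    "s3://reports-bucket": lambda: False,   # placeholder: bucket lookup failed
    "db-extension:pgcrypto": lambda: True,  # placeholder: extension is installed
}
print(find_missing(assumed, probes))
```

Run against a mock, every probe succeeds by construction; the check is only meaningful when the probes query the real environment.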

Multi-Step Reasoning Breaks at Integration Points

Most AI tools chain together API calls that individually work but violate your specific distributed transaction requirements. Consider an AI-generated payment workflow: it queries inventory, reserves stock, charges the card, and updates the order - each step perfectly valid in isolation.

Deploy it to production, and it breaks. AI assumed synchronous responses when your system uses asynchronous messaging. The individual functions pass unit tests. Integration tests with mocked services pass. The workflow fails when deployed to your actual infrastructure where services communicate through event buses and message queues with unpredictable latency.
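A toy illustration of that mismatch, assuming a hypothetical `reserve_stock` step whose reply arrives over a message bus: with an instant (mocked) reply the workflow succeeds, while a realistic delay exceeds the timeout the generated code implicitly assumed. All names and timings are invented for the example.

```python
import asyncio


async def reserve_stock(reply_delay_s: float) -> str:
    """Stand-in for a reservation whose reply arrives asynchronously."""
    await asyncio.sleep(reply_delay_s)
    return "reserved"


async def checkout(reply_delay_s: float, timeout_s: float) -> str:
    """Charge the card only if the reservation reply arrives in time."""
    try:
        await asyncio.wait_for(reserve_stock(reply_delay_s), timeout=timeout_s)
    except asyncio.TimeoutError:
        return "failed: reservation reply arrived too late"
    return "charged"


if __name__ == "__main__":
    # A mocked dependency replies immediately, so the workflow passes.
    print(asyncio.run(checkout(reply_delay_s=0.0, timeout_s=0.05)))
    # A queue-backed dependency with real latency does not.
    print(asyncio.run(checkout(reply_delay_s=0.2, timeout_s=0.05)))
```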

Why Unit Tests Aren't Enough For AI-Generated Code

Unit tests excel at catching logical errors, edge cases, and incorrect implementations. However, they struggle with AI-generated code because it often fails at the infrastructure or integration layer rather than the logic layer, unless you have specific business rules that the AI isn't aware of.

Mocking Hides Real Infrastructure Constraints

AI generates code assuming default Kubernetes behavior and best practices. But your cluster isn't default - it has organization-specific constraints, security policies, and operational requirements that don't exist in AI's training data or in your mocked test environment.

For instance, Cursor can generate a Kubernetes deployment YAML that passes all validation tests. Your unit test mocks the Kubernetes API, confirms that the YAML is valid, and verifies that all the specified resources are correct. But when you deploy it to your cluster, it fails.

Mocks can't simulate your actual system's policies. They can confirm that the manifest is syntactically correct, but they can't validate it against the network policies that restrict pod communication or the security contexts your organization requires.
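The effect is easy to reproduce. In this sketch, `create_deployment` is a hypothetical method (not the real Kubernetes client API) and the label rule is an invented organizational policy; the point is only that a mock accepts any manifest, while an environment that enforces policy does not.

```python
from unittest.mock import MagicMock

REQUIRED_LABELS = {"team", "cost-center"}  # hypothetical organizational rule


def deploy(api, manifest: dict) -> str:
    """Code under test: submit the manifest through whatever API it is given."""
    api.create_deployment(manifest)
    return "deployed"


class PolicyEnforcingCluster:
    """Stand-in for a cluster whose admission control rejects unlabeled workloads."""

    def create_deployment(self, manifest: dict) -> None:
        labels = manifest.get("metadata", {}).get("labels", {})
        missing = REQUIRED_LABELS - labels.keys()
        if missing:
            raise PermissionError(f"admission denied, missing labels: {sorted(missing)}")


manifest = {
    "kind": "Deployment",
    "metadata": {"name": "handler", "labels": {"app": "handler"}},
}

print(deploy(MagicMock(), manifest))  # the mock accepts anything
try:
    deploy(PolicyEnforcingCluster(), manifest)
except PermissionError as err:
    print(err)  # the policy-aware stand-in rejects the same manifest
```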

Missing Cloud Provider Context

AI is trained on generic documentation, not your specific infrastructure. Your infrastructure has cloud provider customizations, specific versions, and operational constraints that only exist in production. Unit tests can't simulate what they don't know about.

An AI-generated database migration script works perfectly against an in-memory SQLite database, and your unit tests confirm the SQL syntax is correct. However, your actual platform runs Amazon RDS with custom parameter groups, specific connection pool limits, and read replicas with replication lag.

You deploy this to production, and it locks tables for 30 minutes and times out. The SQL was correct. The logic was sound. What unit tests couldn't simulate was the cloud provider-specific behaviors, API rate limits, and infrastructure configurations that differ from the database used in local tests.

Configuration Drift Silently Breaks AI Code

AI generates code against specifications from its training data. But your production runs on infrastructure that has drifted from those specifications. Unfortunately, unit tests cannot ‘see’ the configuration. They check code against a specification, not against reality.

For example, you use Cursor to generate a Terraform module. It bases the module on the AWS provider v4.x documentation it was trained on. Your unit test runs terraform validate. However, your platform runs provider v5.x with breaking changes in IAM policy structure.

You push this to production, and it fails with errors about policy format. Unit tests validated correctness against the documented API. But they didn't validate correctness against your actual platform state.
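A drift check of this kind can be very small. The sketch below assumes semantic-version strings and treats any difference in major version as potentially breaking; the version numbers are illustrative, and the installed version would in practice be read from your actual platform rather than hard-coded.

```python
def major(version: str) -> int:
    """Extract the major component from a version such as '4.67.0' or 'v5.1.0'."""
    return int(version.lstrip("v").split(".")[0])


def drifted(assumed_version: str, installed_version: str) -> bool:
    """True when the code was written against a different major version."""
    return major(assumed_version) != major(installed_version)


# Illustrative values: what the generated module assumed vs. what the platform runs.
print(drifted("4.67.0", "5.31.0"))
print(drifted("v5.0.0", "5.31.0"))
```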

System-Level Testing: What AI Couldn't See About Your Platform

Unit tests validate the logic, integration tests validate communication, and system-level tests validate reality.

System testing runs code in real Kubernetes clusters with actual service meshes, CNI plugins, and CSI configurations. It validates against live cloud provider APIs, not localhost mocks. It tests with production-like networking that includes real latency, firewall rules, and DNS resolution complexity.

What system tests catch that unit tests miss:

- Cross-service authentication flows breaking due to misconfigured service accounts

- Network policies blocking traffic that "should work" according to code logic

- Resource limits causing OOMKills under realistic load

- Cloud provider rate limits and quota exhaustions that only appear at scale

Imagine a scenario where AI generated a Kubernetes CronJob for data replication. Unit tests confirmed the job syntax and the existence of the container image. Everything passes. You deploy it to your cluster, and the job fails repeatedly.

It turns out the job's service account lacked the RBAC permissions to access the API, and the storage class configuration disallowed writes on the PersistentVolumeClaim. The code was correct. The infrastructure assumptions were wrong.

This is what makes system-level testing essential for AI-generated code: it validates the assumptions AI made about your infrastructure. Assumptions that were invisible to unit tests because they test in isolation.
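The RBAC half of the CronJob scenario can be checked directly with `kubectl auth can-i`, impersonating the job's service account. This is a sketch under assumptions: the namespace and service account names are hypothetical, and `is_allowed` relies on whatever cluster your current kubeconfig context points at.

```python
import shutil
import subprocess
from typing import List


def can_i_command(verb: str, resource: str, namespace: str,
                  service_account: str) -> List[str]:
    """Build a `kubectl auth can-i` call that impersonates a service account."""
    return [
        "kubectl", "auth", "can-i", verb, resource,
        "--namespace", namespace,
        "--as", f"system:serviceaccount:{namespace}:{service_account}",
    ]


def is_allowed(cmd: List[str]) -> bool:
    """Run the check against the current context; kubectl prints 'yes' when allowed."""
    if shutil.which("kubectl") is None:
        raise RuntimeError("kubectl not found on PATH")
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.stdout.strip() == "yes"


# Hypothetical names for the replication job's namespace and service account.
cmd = can_i_command("create", "pods", "data", "replication-job")
print(" ".join(cmd))
```

Running this as a pre-deployment step in a real cluster answers the question the unit tests never asked: can this identity actually do what the job needs?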

Platform-Level Governance: Making AI Code Production-Safe

Catching AI-generated code failures isn't enough; you need to prevent them from reaching production. This means your platform teams need to put guardrails in place so that only safe code reaches production, without slowing development velocity.

Policy-as-Code for AI-Generated Infrastructure

Platform teams can enforce organizational standards on all AI-generated infrastructure code automatically. When AI generates Terraform, Kubernetes manifests, or Helm charts, policy engines like Kyverno validate them against your organization's rules before they're deployed.

For example, AI generates Terraform code that provisions an S3 bucket. Kyverno policies check: Does it have mandatory tags for cost allocation? Is encryption enabled? Does it follow naming conventions? Are lifecycle policies configured? If the code violates any policy, it fails CI before it even reaches human review.

This works because policies encode your platform's requirements. AI doesn't know your tagging standards, security baselines, or compliance requirements. Policy-as-code catches these gaps automatically, turning implicit platform knowledge into explicit, enforceable rules.
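To make the idea concrete, here are the four checks from the S3 example written as plain Python. This is not Kyverno policy syntax, just a stand-in for the logic; the tag names, naming pattern, and the shape of the `bucket` dictionary are all hypothetical.

```python
import re
from typing import List

REQUIRED_TAGS = {"cost-center", "owner"}          # hypothetical tagging standard
NAME_PATTERN = re.compile(r"^acme-[a-z0-9-]+$")   # hypothetical naming convention


def violations(bucket: dict) -> List[str]:
    """Return a human-readable list of policy violations for a planned bucket."""
    problems = []
    missing = REQUIRED_TAGS - set(bucket.get("tags", {}))
    if missing:
        problems.append(f"missing tags: {sorted(missing)}")
    if not bucket.get("encryption_enabled", False):
        problems.append("encryption is not enabled")
    if not NAME_PATTERN.match(bucket.get("name", "")):
        problems.append("name does not follow the naming convention")
    if not bucket.get("lifecycle_rules"):
        problems.append("no lifecycle rules configured")
    return problems


generated = {"name": "reports", "tags": {"owner": "team-a"}}
for problem in violations(generated):
    print(problem)
```

A CI job would fail whenever the returned list is non-empty, which is how implicit platform knowledge becomes an enforceable rule.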

Environment Parity as a Platform Service

The "works on my machine" problem, which containers were supposed to solve, multiplies with AI code. Developers generate code against generic examples, test it locally with mocked dependencies and services, then deploy it to production infrastructure that their test setup never mirrored.

Platform teams can solve this by providing ephemeral test environments that mirror production. Developers can validate AI-generated code against actual infrastructure, with the same Istio configuration, network policies, and resource quotas as production. These environments are automatically kept in sync with production via GitOps, eliminating configuration drift.

This platform engineering approach to testing treats test infrastructure as a first-class service. Instead of each team building their own testing setup, the platform provides consistent environments where AI code can be validated against real infrastructure before it ships.

Tracking AI Code Quality Across Teams

Platform teams need visibility into how AI-generated code behaves: What percentage of AI-generated code passes system tests on the first try? Which AI tools consistently produce code that requires rework? Which teams are shipping untested AI code to production?

You can't improve what you don't measure. With metrics in place, platform teams can establish quality gates that AI-generated code must pass. These ensure that high-risk changes, such as authentication services, data pipelines, and API gateways, don't go to production without comprehensive checks.

By adding this visibility, your platform teams can understand more about how your team is using AI: does AI fail more often with networking code than storage? Are specific cloud providers more problematic? These insights help platform teams refine their guardrails and guide developers toward better AI usage patterns.
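The first of those questions, first-try pass rate per tool, reduces to a simple aggregation once test results are recorded. A minimal sketch, assuming each record is a hypothetical `(tool_name, passed_on_first_try)` pair:

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def first_pass_rate(results: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of system-test runs that passed on the first try, per tool."""
    totals: Dict[str, int] = defaultdict(int)
    passes: Dict[str, int] = defaultdict(int)
    for tool, passed in results:
        totals[tool] += 1
        if passed:
            passes[tool] += 1
    return {tool: passes[tool] / totals[tool] for tool in totals}


# Made-up records for two unnamed tools.
runs = [
    ("tool-a", True), ("tool-a", False), ("tool-a", True), ("tool-a", True),
    ("tool-b", False), ("tool-b", True),
]
print(first_pass_rate(runs))
```

The same grouping works for any other dimension you record, such as team, cloud provider, or the kind of code (networking versus storage).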

How Testkube Enables System-Level Testing for AI Platform Code

To validate AI-generated code at the system level, platform teams need testing infrastructure that can run that code in a production-like environment. Testkube provides the orchestration layer that makes system-level validation practical at scale, without forcing teams to abandon their existing testing tools or workflows.

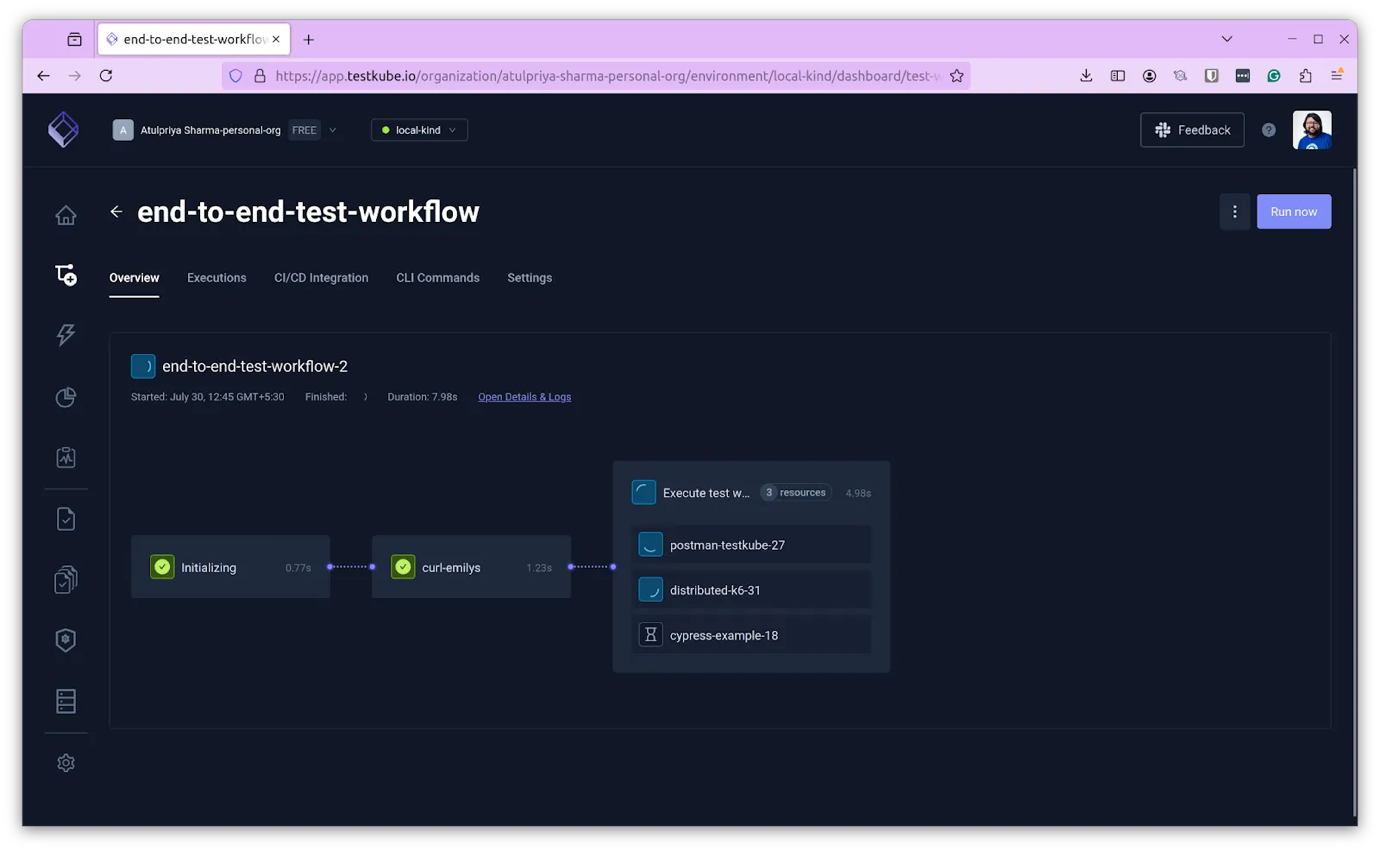

Kubernetes-Native Testing Where AI Code Actually Runs

Testkube runs tests directly inside your Kubernetes clusters using Test Workflows, ensuring your code is tested in an environment that mirrors production. When AI generates a Helm chart or Kubernetes operator, Testkube executes tests that validate against your actual CNI plugin, storage classes, network policies, and security contexts.

Tests interact with real cluster components. This catches infrastructure assumptions AI makes that are wrong for your specific setup - like resource quotas, RBAC permissions, or service mesh configurations that unit tests simulate away.

Orchestrate Multi-Step Validation That Matches AI Code Complexity

AI doesn't just generate single functions - it generates complete workflows: data pipelines touching multiple services, authentication flows spanning APIs, infrastructure deployments with complex dependencies. Testing this requires coordination that traditional CI/CD struggles with.

Testkube's workflow orchestration handles this natively. Define test workflows that mirror your platform: validate AI-generated Terraform applies correctly, check the deployed service responds to health checks, run integration tests against dependent APIs, verify metrics flow to your observability stack. These steps can run sequentially or in parallel based on your dependency graph.
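The "sequential or parallel based on your dependency graph" idea can be illustrated with Python's standard `graphlib` module (Python 3.9+). This is a generic scheduling sketch, not Testkube's API or workflow format; the step names are taken from the examples above and the dependencies between them are assumed.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def stages(depends_on: Dict[str, Set[str]]) -> List[List[str]]:
    """Group steps into stages; steps within a stage have no unmet dependencies."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    plan = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything runnable right now
        plan.append(ready)
        sorter.done(*ready)
    return plan


# Each step maps to the set of steps that must finish before it starts.
steps = {
    "terraform-apply": set(),
    "health-check": {"terraform-apply"},
    "integration-tests": {"health-check"},
    "verify-metrics": {"health-check"},
}
for number, stage in enumerate(stages(steps), start=1):
    print(number, stage)
```

Steps that land in the same stage are safe to run in parallel; stages themselves run in order.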

This can be extended further with Testkube's MCP Server integration, which lets teams 'converse' with their code and generate, orchestrate, and even debug TestWorkflows that validate complex AI-generated code, all without leaving their IDEs.

Make AI Code Quality Visible Across Your Platform

Understanding AI code quality patterns requires observability. Which AI tools consistently generate code that breaks system tests? Which teams ship untested AI code? What percentage of AI-generated infrastructure changes need rework after validation?

Testkube provides this visibility. Every test execution produces centralized logs and artifacts showing exactly where AI code failed - RBAC permissions, network policies, resource quotas, or service mesh configuration.

This enables data-driven decisions. Maybe AI-generated Terraform requires extra validation gates. Maybe certain code generation prompts need refinement. Either way, this visibility helps establish testing as a platform-level capability.

Where Platform Leads Should Start

Implementing system-level testing for AI-generated code doesn't require drastic changes to your existing pipelines, toolset, or processes. Start small, show value, and scale. Here are some practical steps to get you started.

Risk-Based Prioritization

Not all AI-generated code fails. Look for the code with the highest blast radius: authentication and session-related services, API gateways, or infrastructure-as-code templates that provision cloud services.

Focus on integration points, because that is where failures happen. Team A generates code assuming Team B's service behaves in a certain way, without having seen how Team B's code is implemented. This is where AI's limited context causes the most problems.

If teams are using AI to generate service mesh configurations or storage access policies, run a thorough system test to validate them.

Quick Win: One System-Level Test

Navigate through your codebase and pick your riskiest AI-generated service. Add one system-level test that validates actual cluster deployment with production-like configuration.

Track what this test catches that unit tests missed. Document it. Build a business case: "This test prevented an outage that would have cost X hours of downtime. It caught a configuration mismatch that unit tests passed. It reduced our MTTR by Y% because we caught the issue before production."

This single system test becomes your proof point for getting buy-in from engineering leadership. It is concrete evidence that system-level testing catches AI-specific failures. Use it to justify broader investment in testing infrastructure.

Platform Capability Development

Once you've proven value, it’s time to scale your efforts. Establish standard testing patterns for AI-generated code across your organization. Create reusable test workflows teams can adopt.

For example:

- Terraform validation workflow that checks provider version compatibility, applies to test environment, validates resources.

- Kubernetes operator workflow that deploys to test cluster, validates RBAC, checks resource consumption.

- Service deployment workflow that validates networking, authentication, and observability integration.

Integrate system testing into your platform's CI/CD guardrails. Use automated test triggering based on Kubernetes events to trigger specific TestWorkflows when AI-generated code gets deployed. Make the process automatic.

Conclusion

AI generates code faster than ever, but it generates that code without seeing your actual platform - your specific configurations, organizational policies, and constraints. Unit tests were designed to validate logic in isolation, not infrastructure integration at scale.

System-level testing bridges this gap. It validates AI-generated code against the infrastructure it will actually run on, enabling you to catch the assumptions AI makes that unit tests can't see.

Testkube makes this possible by running tests directly in your Kubernetes clusters, orchestrating complex validation workflows, and providing the observability platform teams need to track AI code quality across the organization.

Ready to validate AI-generated code in your actual Kubernetes environment? Try Testkube and see how you can implement effective system-level testing.

About Testkube

Testkube is a cloud-native continuous testing platform for Kubernetes. It runs tests directly in your clusters, works with any CI/CD system, and supports every testing tool your team uses. By removing CI/CD bottlenecks, Testkube helps teams ship faster with confidence.

Explore the sandbox to see Testkube in action.