Try Testkube instantly in our sandbox. No setup needed.

Executive Summary

The best proof that a tool works? When your own engineers reach for it without being asked. That's exactly what happened when Catalin H, Senior Full Stack & AI Systems Engineer at Testkube, faced a development challenge and instinctively turned to our AI Assistant.

The Challenge: Building Workflows That Fail on Purpose

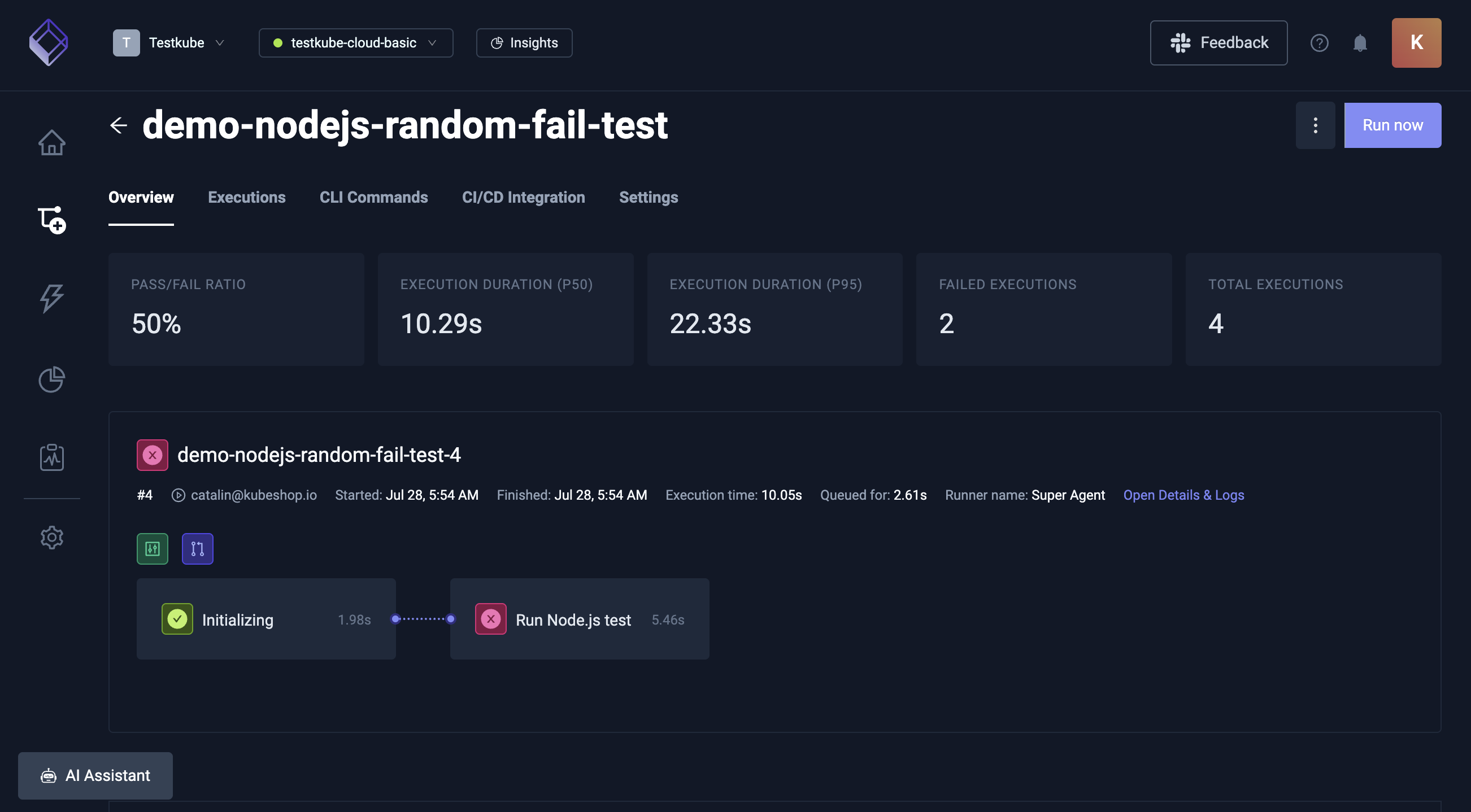

The Testkube team was implementing a workflow health feature that displays weather icons next to workflow names, indicating their status. While they had plenty of healthy, green workflows running at 100% success, they needed something different for testing: workflows that would fail randomly to simulate real-world flaky behavior.
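To see why always-green workflows weren't enough, it helps to picture how a pass-rate-based health indicator behaves. The sketch below is purely illustrative: the passRate and healthIcon functions, the icon names, and the thresholds are hypothetical assumptions, not Testkube's actual health calculation. It shows that a workflow at 100% success only ever lands in one bucket, while a flaky one can fall anywhere in between.

```javascript
// Hypothetical sketch: map a workflow's recent pass rate to a weather-style
// health icon. Thresholds and icon names are illustrative assumptions,
// NOT Testkube's real health formula.
function passRate(results) {
  // results: array of "passed" / "failed" strings from recent executions
  if (results.length === 0) return null; // no executions yet
  const passed = results.filter((r) => r === "passed").length;
  return passed / results.length;
}

function healthIcon(rate) {
  if (rate === null) return "unknown";
  if (rate === 1) return "sunny"; // every execution passed
  if (rate >= 0.75) return "partly-cloudy";
  if (rate >= 0.5) return "cloudy";
  if (rate >= 0.25) return "rainy";
  return "stormy";
}

// An always-green workflow only exercises the top bucket;
// a flaky one lands somewhere in the middle.
console.log(healthIcon(passRate(["passed", "passed", "passed", "passed"]))); // sunny
console.log(healthIcon(passRate(["passed", "failed", "passed", "failed"]))); // cloudy
```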

"For my testing, I needed some workflows that were flaky, that failed randomly, so I can see different versions of that health," Catalin explains in his demonstration. Rather than spending time manually crafting these specialized workflows, he decided to explore how our AI Assistant could accelerate the process.

The AI-Powered Development Process

Starting with the Right Questions

The key to success with AI assistance lies in asking the right questions and building context incrementally. Catalin began with a specific constraint: "I wanted to run this with a single YAML, I don't want to write another test in another language on a GitHub repository and have to check out and take more time for this workflow."

His first query to the AI Assistant was straightforward: could the content of a Node.js test file be written directly into the YAML workflow file? Within seconds, the AI Assistant confirmed this was possible using the content.files field of the workflow spec and provided the technical foundation needed to proceed.

From Concept to Working Code

With the basic approach validated, the next step was implementation. Catalin asked the AI Assistant to "write the YAML for a workflow that fails randomly, use Node.js and write the code of the test as an inline content file."

The AI Assistant delivered a complete solution that included:

- A properly structured YAML configuration with a "demo" prefix for easy identification

- Inline Node.js code using Math.random() to generate random pass/fail behavior

- Correct syntax for embedding code directly in the workflow specification
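The random pass/fail behavior comes down to one comparison: the inline test fails whenever Math.random() returns a value above 0.5. Here's a minimal sketch of that logic, assuming a hypothetical randomlyFails helper with an injectable random source (the generated workflow calls Math.random() directly), plus a small loop that estimates how often a run fails across many executions.

```javascript
// Sketch of the pass/fail decision used by the inline test:
// a run fails when the random value is greater than 0.5.
// `random` is a hypothetical injection point so the logic can be
// exercised deterministically; the workflow uses Math.random() directly.
function randomlyFails(random = Math.random) {
  return random() > 0.5;
}

// Estimate the observed failure rate over many simulated runs.
function simulateFailureRate(runs, random = Math.random) {
  let failures = 0;
  for (let i = 0; i < runs; i++) {
    if (randomlyFails(random)) failures++;
  }
  return failures / runs;
}

console.log(randomlyFails(() => 0.9)); // true  (would exit with code 1)
console.log(randomlyFails(() => 0.1)); // false (would exit with code 0)
console.log(simulateFailureRate(10000)); // varies per run, typically close to 0.5
```

Because Math.random() returns values in [0, 1), a run fails about half the time. Raising or lowering the 0.5 threshold would make a workflow more or less flaky, which is one possible way to produce workflows in different health states; that variation is our own assumption, not part of the generated YAML.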

"The name we can see has the demo prefix correctly and we can see it uses the spec content files, the syntax looks correctly," Catalin noted while reviewing the generated code.

Here's the final generated YAML, which already includes the refinements described in the following sections:

```yaml
kind: TestWorkflow
apiVersion: testworkflows.testkube.io/v1
metadata:
  name: demo-nodejs-random-fail-test
spec:
  config:
    forcedResult:
      type: string
      default: ""
  content:
    files:
    - path: /test.js
      content: |
        const forcedResult = process.env.FORCED_RESULT || "{{ config.forcedResult }}";
        if (forcedResult) {
          console.log(`Forced result: ${forcedResult}`);
          process.exit(forcedResult === 'pass' ? 0 : 1);
        } else {
          const randomFail = Math.random() > 0.5;
          console.log(`Random result: ${randomFail ? 'fail' : 'pass'}`);
          process.exit(randomFail ? 1 : 0);
        }
  steps:
  - name: Run Node.js test
    container:
      image: node:14
      env:
      - name: FORCED_RESULT
        value: '{{ config.forcedResult }}'
    shell: node /test.js
```

Iterative Improvements Through Conversation

The real power of the AI Assistant became apparent in the iterative refinement process. Recognizing that purely random behavior would be difficult to test reliably, Catalin requested an enhancement: adding an environment variable to force specific results.

The AI Assistant integrated this requirement, updating the Node.js code to read a FORCED_RESULT value from process.env and the YAML configuration to set that environment variable on the test step, empty by default. When FORCED_RESULT is empty, the test keeps its random behavior; when it is set, the result is forced, so the same workflow supports both random and controlled scenarios.
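The override follows a simple precedence rule, visible in the generated test code: a non-empty forced result decides the outcome, and only an empty value falls back to randomness. The sketch below extracts that rule into a hypothetical resolveExitCode function so it can be checked without actually exiting the process.

```javascript
// Sketch of the exit-code logic in the inline test, extracted into a
// hypothetical pure function (the workflow calls process.exit directly).
function resolveExitCode(forcedResult, random = Math.random) {
  if (forcedResult) {
    // Only the exact string "pass" succeeds; any other non-empty value fails.
    return forcedResult === "pass" ? 0 : 1;
  }
  // Empty or unset: fall back to the coin flip (fail when random() > 0.5).
  return random() > 0.5 ? 1 : 0;
}

console.log(resolveExitCode("pass")); // 0
console.log(resolveExitCode("fail")); // 1
console.log(resolveExitCode("", () => 0.9)); // 1
console.log(resolveExitCode(undefined, () => 0.2)); // 0
```

One consequence worth knowing: only the exact string pass succeeds, so any other non-empty value, including a typo like Pass, forces a failure.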

Discovering Advanced Features

The AI Assistant also pointed Catalin to runtime parameters. "Testkube allows you to pass the runtime and you can parameterize," he explained, before asking it to implement the solution using spec.config parameters. The resulting forcedResult parameter can be supplied at run time through the UI, eliminating the need for manual YAML edits.

The Results: From Idea to Implementation in Minutes

The entire process from initial concept to fully functional, parameterized workflow took just a few minutes of conversation with the AI Assistant. The final solution included:

- A complete YAML workflow configuration

- Inline Node.js code for random failure simulation

- Environment variable support for controlled workflow behavior

- Runtime parameterization for UI-based control

- Explicit exit codes (0 for pass, 1 for fail) that determine each execution's result

"This is one way I've been using the copilot. It really works best when you ask some questions, build a bit of context, and try to learn about the Testkube features as the copilot also guides you with that and gives you context," Catalin observed.

Beyond Workflow Creation: Other AI Assistant Use Cases

Catalin's workflow creation example represents just one facet of how Testkube's AI Assistant can enhance your testing operations. Here are additional ways teams are leveraging Testkube’s AI Assistant:

- Log Analysis and Debugging: When workflow executions fail, the AI Assistant can quickly analyze log outputs and provide insights. Simply ask questions like "why did this execution fail?" or "write a short summary of what failed" to get detailed diagnostic information without manually parsing through lengthy log files.

- Dashboard Navigation and Discovery: The AI Assistant serves as an intelligent guide through the Testkube Dashboard, helping users quickly locate specific features and pages. Whether you need to "create an API token" or "check the audit log," the AI Assistant provides direct links to relevant pages, eliminating navigation guesswork.

- Workflow Search and Filtering: Complex queries become simple with natural language processing. Ask the AI Assistant to "find all workflows that failed" or "find all successful cypress workflows and failed postman workflows," and it will navigate you to the appropriate pages with filters pre-applied.

- YAML Configuration Assistance: Beyond creating workflows from scratch, the AI Assistant excels at helping with specific configuration challenges. Whether you need examples of service configurations, worker settings, or parallelism setups, the AI Assistant can provide tailored examples and explanations.

- Real-Time Troubleshooting: The AI Assistant's deep integration with the Testkube ecosystem means it can access real-time data from your Control Plane, providing current insights rather than generic advice. This contextual awareness makes troubleshooting more effective and recommendations more relevant.

The Collaborative Advantage

What sets Testkube's AI Assistant apart from generic chatbots is its collaborative approach to problem-solving. Rather than simply answering isolated questions, it maintains context throughout conversations, anticipates follow-up needs, and proactively suggests improvements.

"You can collaborate with it to get to the desired results," Catalin noted, highlighting how the AI Assistant becomes a true development partner rather than just a question-answering tool.

Watch Catalin's Full Demonstration

Want to see this entire process in action? Watch Catalin's detailed walkthrough where he collaborates with the AI Assistant in real-time to build these flaky workflows from scratch.

Getting Started with AI-Powered Workflow Development

The success of Catalin's workflow creation example demonstrates the potential for AI to transform how we approach testing challenges. By combining domain-specific knowledge with conversational AI, complex tasks become accessible to developers regardless of their familiarity with specific testing frameworks or configurations.

Whether you're building flaky workflows, as in this case, or debugging failed executions, the AI Assistant provides guidance that adapts to your specific needs and context.

Enable the Testkube AI Assistant in your organization settings and discover how conversational AI can streamline your development today.

About Testkube

Testkube is a cloud-native continuous testing platform for Kubernetes. It runs tests directly in your clusters, works with any CI/CD system, and supports every testing tool your team uses. By removing CI/CD bottlenecks, Testkube helps teams ship faster with confidence.

Explore the sandbox to see Testkube in action.